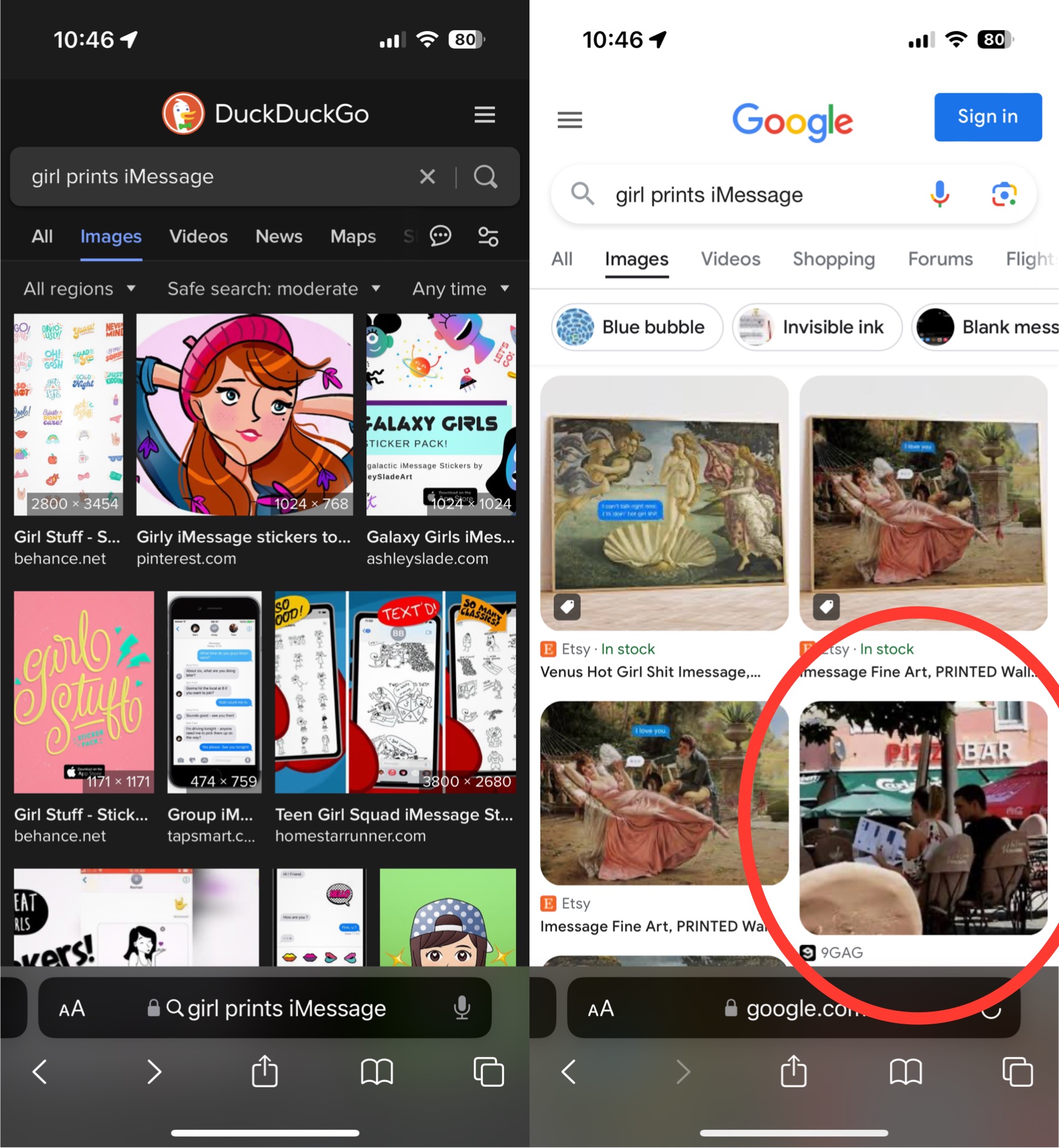

I always try to replicate these results, because the majority of them are fake. For this one in particular I don’t get any AI results, which is interesting but inconclusive.

How would you expect to recreate them when the models are given random perturbations such that the results usually vary?

The point here is that this is likely another fake image, meant to get the attention of people who quickly engage with everything anti AI. Google does not generate an AI response to this query, which I only know because I attempted to recreate it. Instead of blindly taking everything you agree with at face value, it can behoove you to question it and test it out yourself.

Google is well known to do A/B testing, meaning you might not get a particular response (or even whole sets of results generated via different algorithms they are testing) even if your neighbor searches for the same thing.

So again, I ask: how does your anecdotal evidence somehow invalidate other anecdotal evidence? If your evidence isn’t anecdotal, I am very interested in your results.

Otherwise, what you’re saying has the same or less value than the example.

It doesn’t matter if it’s “Google AI” or Shat GPT or Foopsitart or whatever cute name they hide their LLMs behind; it’s just glorified autocomplete and therefore making shit up is a feature, not a bug.

Making shit up IS a feature of LLMs. It’s crazy to use one as a search engine. Now they’ll try to stop it from hallucinating to make it a better search engine and kill the one thing it’s good at…

Maybe they should branch it off. Have one for making shit up purposes and one for search engine. I haven’t found the need for one that makes shit up but have gotten value using them to search. Especially with Google going to shit and so many websites being terribly designed and hard to navigate.

ChatGPT was of much higher quality a year ago than it is now.

It could be very accurate. Now it’s hallucinating the whole time.

I was thinking the same thing. LLMs have suddenly got much worse. They’ve lost the plot lmao

That’s because of the concerted effort to sabotage LLMs by poisoning their data.

I’m not sure that’s definitely true… my sense is that the AI money/arms race has made them push out new/more as fast as possible so they can be first and get literally billions in investment capital.

Maybe. I’m sure there’s more than one reason. But the negativity people have for AI is really toxic.

Being critical of something is not “toxic”.

People aren’t being critical. At least most aren’t. They’re just being haters, tbh. But we can argue this till the cows come home, and it’s not gonna change either of our minds, so let’s just not.

is it?

nearly everyone I speak to about it (other than one friend I have who’s pretty far on the spectrum) agrees that no one asked for this. few people want any of it, it’s consuming vast amounts of energy, it’s being shoehorned into programs like Skype and Adobe Reader where no one wants it, it’s very, very soon to become mandatory in OSes like Windows, iOS and Android, and it already threatens election integrity (most notably in India) and is being used to harass individuals with deepfake porn, etc.

the ethics board at OpenAI essentially got disbanded and replaced by people interested only in the fastest possible expansion and rollout to beat the competition and maximize their capital gains…

…also AI “art”, which is essentially taking everything a human has ever made, shredding it into confetti and reconstructing it in the shape of something resembling the prompt, is starting to flood image search with its grotesque human-mimicking outputs, like things with melting, split pupils and 7 fingers…

you’re saying people should be positive about all this?

You’re cherry picking the negative points only, just to lure me into an argument. Like all tech, there’s definitely good and bad. Also, the fact that you’re implying you need to be “pretty far on the spectrum” to think this is good is kinda troubling.

The only people poisoning the data set are the makers who insist on using Reddit content

But how do we pin it on Biden?

I don’t bother using things like Copilot or other AI tools like ChatGPT. I mean, what they CAN give you correctly is pretty cool, and the new demo floored me.

But I prefer just using image generators like DALL-E and Stable Diffusion to make funny images or a new profile picture on Steam.

But this example here? Good god I hope this doesn’t become the norm…

This is definitely different from using Dall-E to make funny images. I’m on a thread in another forum that is (mostly) dedicated to AI images of Godzilla in silly situations and doing silly things. No one is going to take any advice from that thread apart from “making Godzilla do silly things is amusing and worth a try.”

These text-generation LLMs are good for generating text. I use them to write better emails or listings or something.

I had to do a presentation for work a few weeks ago. I asked Copilot to generate an outline for a presentation on the topic.

It spat out a heading and a few sections with details on each. It was generic enough, but it gave me the structure I needed to get started.

I didn’t dare ask it for anything factual.

Worked a treat.

You can ask these LLMs to continue filling out the outline too. They just generate a bunch of generic points and you can erase or fill in the details.

That’s how I used it to write cover letters for job applications. I feed it my resume and the job listing and it puts something together. I’ve got to do a lot of editing and sometimes it just makes up experience, but it’s faster than trying to write it myself.

Just don’t use google

Why people still use it is beyond me.

Because Google has literally poisoned the internet by becoming the de facto target of SEO. Even if Google were to suddenly disappear, everything is so optimized for Google’s algorithm that any replacement is just going to favor the SEO everyone has already done.

The abusive adware company can still sometimes kill it with vague searches.

(Still too lazy to properly catalog the daily occurrences such as above.)

SearXNG proxying Google still isn’t as good sometimes for some reason (maybe search bubbling even in private browsing w/VPN). Might pay for search someday to avoid falling back to Google.

Why do we call it hallucinating? Call it what it is: lying. You want to be more “nice” about it: fabricating. “Google’s AI is fabricating more lies. No one dead… yet.”

To be fair, they call it a hallucination because hallucinations don’t have intent behind them.

LLMs don’t have any intent. Period.

A purposeful lie requires an intent to lie.

Without any intent, it’s not a lie.

I agree that “fabrication” is probably a better word for it, especially because it implies the industrial computing processes required to build these fabrications. It allows the word fabrication to function as a double entendre: It has been fabricated by industrial processes, and it is a fabrication as in a false idea made from nothing.

LLMs may not have any intent, but companies do. In this case, Google decides to present the AI answer on top of the regular search results, knowing that AI can make stuff up. Maybe the AI isn’t lying, but Google definitely is. Even with the “everything is experimental, learn more” line: if they really wanted you to learn more, they’d just give you the information instead of making you click again for it.

In other words, I agree with your assessment here. The petty abject attempts by all these companies to produce the world’s first real “Jarvis” are all couched in “they didn’t stop to think if they should.”

My actual opinion is that they don’t want to think about whether they should, because they know the answer. The pressure to go public with a shitty model outweighs the responsibility to the people relying on the search results.

It is difficult to get a man to understand something when his salary depends on his not understanding it.

-Upton Sinclair

Sadly, same as it ever was. You are correct, they already know the answer, so they don’t want to consider the question.

There’s also the argument that “if we don’t do it, somebody else would,” and I kind of understand that, while I also disagree with it.

I did look up an article about it that basically said the same thing, and while I get “lie” implies malicious intent, I agree with you that fabricate is better than hallucinating.

The most damning thing to call it is “inaccurate”. Nothing will drive the average person away from a company’s information-gathering products faster than associating them with being inaccurate more often than not. That is why they are inventing different things to call it. It sounds less bad to say “my LLM hallucinates sometimes” than it does to say “my LLM is inaccurate sometimes”.

It’s not lying or hallucinating. It’s describing exactly what it found in search results. There’s a web page with that title from that date. Now the problem is that the web page is Pinterest and the title is the result of aggressive SEO. These types of SEO practices are what have made Google largely useless for the past several years, and an AI that is based on these useless results will be just as useless.

Because lies require intent to deceive, which the AI cannot have.

They merely predict the most likely thing that should next be said, so “hallucinations” is a fairly accurate description
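The “predict the most likely next thing” idea can be illustrated with a toy sketch. This is a hypothetical greedy bigram model, far simpler than a real LLM (which uses a neural network over subword tokens and usually samples rather than always taking the top choice), and the names `train_bigrams` and `autocomplete` are made up for illustration. It only shows how purely statistical continuation can produce a fluent sentence that appears nowhere in its training text, with no intent involved.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the text."""
    words = text.split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def autocomplete(counts: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily append the most frequent follower, up to `length` extra words."""
    out = [start]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:  # dead end: this word never had a successor
            break
        out.append(followers.most_common(1)[0][0])
    return out


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(" ".join(autocomplete(model, "the", 4)))
print(" ".join(autocomplete(model, "cat", 4)))
```

Starting from "cat", the toy model confidently produces "cat sat on the cat", a sentence its corpus never contains: every step is locally "most likely", yet the whole is made up.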

How do you guys get these AI things? I don’t have such a thing when I search using Google.

Gmail has something like it too, with the summary bit at the top of Amazon order emails. Had one the other day that said I ordered 2 new phones, which freaked me out. It’s because there were ads for phones in the order receipt email.

IIRC Amazon emails specifically don’t mention products that you’ve ordered in their emails to avoid Google being able to scrape product and order info from them for their own purposes via Gmail.

I probably have it blocked somewhere on my desktop, because it never happens on my desktop, but it happens on my Pixel 4a pretty regularly.

&udm=14 baybee
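For anyone unfamiliar: appending `udm=14` to a Google search URL selects the plain “Web” results filter, which (at the time of this thread) leaves out the AI summary. Below is a minimal Python sketch that builds such a URL. The helper name `web_only_search_url` is hypothetical, and since `udm=14` is, as far as I know, not an officially documented parameter, Google could change or drop it at any time.

```python
from urllib.parse import urlencode


def web_only_search_url(query: str) -> str:
    """Build a Google search URL using the udm=14 ("Web") results filter.

    Assumption: udm=14 keeps behaving as observed in this thread.
    """
    # urlencode percent-escapes the query: spaces become '+', '&' becomes %26
    params = urlencode({"q": query, "udm": 14})
    return f"https://www.google.com/search?{params}"


print(web_only_search_url("cvs recall"))
```

Most browsers also let you register a URL like this as a custom search engine, with `%s` standing in for the query, so every search goes straight to the Web filter.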

I believe it’s US-only for now

Thank god

Well to be fair the OP has the date shown in the image as Apr 23, and Google has been frantically changing the way the tool works on a regular basis for months, so there’s a chance they resolved this insanity in the interim. The post itself is just ragebait.

*not to say that Google isn’t doing a bunch of dumb shit lately, I just don’t see this particular post from over a month ago as being as rage inducing as some others in the community.

I get them pretty regularly using the Google search app on my android.

Again, as a chatgpt pro user… what the fuck is google doing to fuck up this bad.

This is so comically bad I almost have to assume it’s on purpose? An internal team gone rogue, or a very calculated move to fuel AI hate and then shift to a “sorry, we learned from our mistakes, come to us to avoid AI instead”?

I think it’s because what Google is doing is just ChatGPT with extra steps. Instead of just letting the AI generate answers based on curated training data, they trained it and then gave it a mission to summarize the contents of their list of unreliable sources.

I wonder if all these companies rolling out AI before it’s ready will have a widespread impact on how people perceive AI. If you learn early on that AI answers can’t be trusted will people be less likely to use it, even if it improves to a useful point?

> will have a widespread impact on how people perceive AI

Here’s hoping.

If so, companies rolling out blatantly wrong AI are doing the world a service and protecting us against subtly wrong AI

Google were the good guys after all???

To be fair, you should fact check everything you read on the internet, no matter the source (though I admit that’s getting more difficult in this era of shitty search engines). AI can be a very powerful knowledge-acquiring tool if you take everything it tells you with a grain of salt, just like with everything else.

This is one of the reasons why I only use AI implementations that cite their sources (edit: not Google’s), cause you can just check the source it used and see for yourself how much is accurate, and how much is hallucinated bullshit. Hell, I’ve had AI cite an AI generated webpage as its source on far too many occasions.

Going back to what I said at the start, have you ever read an article or watched a video on a subject you’re knowledgeable about, just for fun to count the number of inaccuracies in the content? Real eye-opening shit. Even before the age of AI language models, misinformation was everywhere online.

Personally, that’s exactly what’s happening to me. I’ve seen enough that AI can’t be trusted to give a correct answer, so I don’t use it for anything important. It’s a novelty like Siri and Google Assistant were when they first came out (and honestly still are) where the best use for them is to get them to tell a joke or give you very narrow trivia information.

There must be a lot of people who are thinking the same. AI currently feels unhelpful and wrong, we’ll see if it just becomes another passing fad.

I’m no defender of AI and it just blatantly making up fake stories is ridiculous. However, in the long term, as long as it does eventually get better, I don’t see this period of low to no trust lasting.

Remember how bad autocorrect was when it first rolled out? People would always complain about it and crack jokes about how dumb it was. Then it slowly got better and better, and now, for the most part, everyone just trusts their phones to fix any spelling mistakes they make, as long as they’re close enough.

I wish we could really press the main point here: Google is willfully foisting their LLM on the public and presenting it as a useful tool. It is not, which makes them guilty of negligence and fraud.

Pichai needs to end up in jail and Google broken up into at least ten companies.

Maybe they actually hate the idea of LLMs and are trying to sour the public’s opinion on it to kill it.

I mean, LLMs are not for getting exact information. Do people ever read up on the stuff they use?

Theoretically, what would be the utility of AI summaries in Google Search, if not getting exact information?

Steering your eyes toward ads, of course, what a silly question.

This feels like something you should go tell Google about rather than the rest of us. They’re the ones who have embedded LLM-generated answers to random search queries.

Of course you should not trust everything you see on the internet.

Be cautious and when you see something suspicious do a google search to find more reliable sources.

Oh … Wait !

Could this be grounds for CVS to sue Google? It seems like this could harm their business if people think CVS products are less trustworthy. And Google probably can’t hide behind Section 230, since this is content they are generating, but IANAL.

IIRC, in cases where the central complaint was AI, ML, or other black-box technology, the company in question was never held responsible because “we don’t know how it works”. The AI surge we’re seeing now is likely a consequence of those decisions and the crypto crash.

I’d love to see CVS try to push a lawsuit, though.

In Canada, there was a company using an LLM chatbot that had to uphold a claim the bot had made to one of its customers. So there’s precedent for forcing companies to take responsibility for what their LLMs say (at least if they’re presenting them as trustworthy and representative).

This was with regards to Air Canada and its LLM that hallucinated a refund policy, which the company argued they did not have to honour because it wasn’t their actual policy and the bot had invented it out of nothing.

An important side note is that one of the reasons the court cited for ruling in favour of the customer is that the company did not disclose that the LLM wasn’t the final say on its policy, or that a customer should confirm with a representative before acting upon the information. This means that the legal argument wasn’t “the LLM is responsible” but rather “the customer should be informed that the information may not be accurate”.

I point this out because I’m not so sure CVS would have a clear cut case based on the Air Canada ruling, because I’d be surprised if Google didn’t have some legalese somewhere stating that they aren’t liable for what the LLM says.

Yeah, the legalese happens to be in the back pocket of Sundar Pichai.

But those end up being the same in practice. If you have to put up a disclaimer that the info might be wrong, then who would use it? I can get the wrong answer or unverified hearsay anywhere. The whole point of contacting the company is to get the right answer, or at least one the company is forced to stick to.

This isn’t just minor AI growing pains, this is a fundamental problem with the technology that causes it to essentially be useless for the use case of “answering questions”.

They can slap as many disclaimers as they want on this shit; but if it just hallucinates policies and incorrect answers it will just end up being one more thing people hammer 0 to skip past or scroll past to talk to a human or find the right answer.

But it has to be clearly presented. Consumer law and defamation law have different requirements for disclaimers.

“We don’t know how it works but released it anyway” is a perfectly good reason to be sued when you release a product that causes harm.

The crypto crash? Idk if you’ve looked at Crypto recently lmao

Current froth doesn’t erase the previous crash. It’s clearly just a tulip bulb. Even tulip bulbs were able to be traded as currency for houses and large purchases during tulip mania. How much does a great tulip bulb cost now?

67k, only barely below its ATH (all-time high).

67k what? In USD right? Tell us when buttcoin has its own value.

That bubble is gonna burst eventually. Bitcoin is only useful for buying monero, which is only useful for buying drugs on the internet. It’s massively overvalued. Once all of the big players have divested it’ll go down pretty fast. If you’re invested in crypto you should pull your money out of it, I really don’t think it’ll last much longer.

People have been saying that for 10+ years lmao, how about we see what happens.

Sure, and Bernie Madoff’s ponzi scheme ran for almost two decades. It’s your money man, do what you want, but if you ask me, there’s gonna be a lot of unfortunate, hard-working folk holding the bag when people realise that the entire thing is basically worthless

iirc alaska airlines had to pay

That was their own AI. If CVS’ AI claimed a recall, it could be a problem.

So will the google AI be held responsible for defaming CVS?

Spoiler alert- they won’t.

I would love if lawsuits brought the shit that is ai down. It has a few uses to be sure but overall it’s crap for 90+% of what it’s used for.

🎶 Tell me lies, tell me sweet little lies 🎶

it’s probably going to be doing that