“The real benchmark is: the world growing at 10 percent,” he added. “Suddenly productivity goes up and the economy is growing at a faster rate. When that happens, we’ll be fine as an industry.”

Needless to say, we haven’t seen anything like that yet. OpenAI’s top AI agent — the tech that people like OpenAI CEO Sam Altman say is poised to upend the economy — still moves at a snail’s pace and requires constant supervision.

microsoft rn:

✋ AI

👉 quantum

can’t wait to have to explain the difference between asymmetric-key and symmetric-key cryptography to my friends!

Forgive my ignorance. Is there no known quantum-safe symmetric-key encryption algorithm?

i’m not an expert by any means, but from what i understand, most symmetric key and hashing cryptography will probably be fine, but asymmetric-key cryptography will be where the problems are. lots of stuff uses asymmetric-key cryptography, like https for example.
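To put rough numbers on the symmetric vs asymmetric split, here is a back-of-the-envelope sketch. It assumes a large fault-tolerant quantum computer and uses the usual textbook rule of thumb: Grover's algorithm roughly halves the effective key length of a symmetric cipher, while Shor's algorithm breaks RSA and elliptic-curve schemes outright. The function names and the algorithm list are made up for illustration.

```python
# Rule-of-thumb sketch, not a security analysis.
# Assumption: a large, fault-tolerant quantum computer exists.

# Public-key schemes built on factoring or discrete logs (illustrative list).
SHOR_VULNERABLE = {"RSA", "DH", "ECDH", "ECDSA"}


def grover_effective_bits(key_bits):
    """Grover's search gives a quadratic speedup, so an n-bit key
    offers roughly n/2 bits of security against it."""
    return key_bits // 2


def quantum_outlook(algorithm, key_bits):
    """Return a one-line summary for a (hypothetical) algorithm label."""
    if algorithm in SHOR_VULNERABLE:
        return "broken by Shor's algorithm"
    remaining = grover_effective_bits(key_bits)
    return f"about {remaining}-bit security against Grover's algorithm"


for name, bits in [("AES", 128), ("AES", 256), ("RSA", 2048), ("ECDH", 256)]:
    print(name, bits, "->", quantum_outlook(name, bits))
```

So the usual advice for symmetric keys is just "use the bigger key size", while the asymmetric key exchange part of protocols like TLS needs replacement algorithms.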

Oh that’s not good. i thought TLS was quantum safe already

R&D is always a money sink

Especially when the product is garbage lmao

It isn’t R&D anymore if you’re actively marketing it.

Uh… Used to be, and should be. But the entire industry has embraced treating production as test now. We sell alpha release games as mainstream releases. Microsoft fired QC long ago. They push out world breaking updates every other month.

And people have forked over their money with smiles.

Microsoft fired QC long ago.

I can’t wait until my cousin learns about this, he’ll be so surprised.

I’d tell him but he’s at work. At Microsoft, in quality control.

Make sure to also tell him he’s doing a shit job!

He’s probably been fired long ago, but due to non-existent QC, he was never notified.

Milton?

Sarah?

Is he saying it’s just LLMs that are generating no value?

I wish reporters could be more specific with their terminology. They just add to the confusion.

It is fun to generate some stupid images a few times, but you can’t trust that “AI” crap with anything serious.

I was just talking about this with someone the other day. While it’s truly remarkable what AI can do, its margin for error is just too big for most if not all of the use cases companies want to use it for.

For example, I use the Hoarder app which is a site bookmarking program, and when I save any given site, it feeds the text into a local Ollama model which summarizes it, conjures up some tags, and applies the tags to it. This is useful for me, and if it generates a few extra tags that aren’t useful, it doesn’t really disrupt my workflow at all. So this is a net benefit for me, but this use case will not be earning these corps any amount of profit.

On the other end, you have Google’s Gemini, which now gives you an AI-generated answer to your queries. The point of this is to aggregate data from several sources within the search results and return it to you, saving you the time of having to look through several search results yourself. And like 90% of the time it actually does a great job. The problem with this is the goal, which is to save you from having to check individual sources, and its reliability rate. If I google 100 things and Gemini answers 99 of them accurately but completely hallucinates the 100th, then all 100 times I have to check its sources and verify that what it said was correct. Which means I’m now back to just… you know… looking through the search results one by one like I would have anyway without the AI.
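A quick sketch of that arithmetic, assuming each answer is wrong independently with the same probability (a simplification, real errors are probably not independent). The function name is made up for illustration.

```python
def prob_at_least_one_error(accuracy, num_queries):
    """Chance that a batch of answers contains at least one wrong one,
    assuming independent errors and a fixed per-answer accuracy."""
    return 1.0 - accuracy ** num_queries


# 99% per-answer accuracy over 100 searches.
risk = prob_at_least_one_error(0.99, 100)
print(f"Chance of at least one bad answer in 100 queries: {risk:.1%}")
```

With 99% accuracy, there is roughly a 63% chance that at least one of the 100 answers is wrong, and nothing tells you which one, so you end up verifying all of them.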

So while AI is far from useless, it can’t now and never will be able to be relied on for anything important, and that’s where the money to be made is.

Even your manual search results may have you find incorrect sources, selection bias for what you want to see, heck even AI generated slop, so the AI generated results will just be another layer on top. Link aggregating search engines are slowly becoming useless at this rate.

While that’s true, the thing that stuck out to me is not even that the AI was misled by finding AI slop itself, or even by somebody falsely asserting something. I googled something with a particular yes or no answer. “Does X technology use Y protocol”. The AI came back with “Yes it does, and here’s how it uses it”, and upon visiting the reference page for that answer, it was documentation for that technology where it explained very clearly that X technology does NOT use Y protocol, and then went into detail on why it doesn’t. So even when everything lines up and the answer is clear and unambiguous, the AI can give you an entirely fabricated answer.

What’s really awful is that it seems like they’ve trained these LLMs to be “helpful”, which means to say “yes” as much as possible. But, that’s the case even when the true answer is “no”.

I was searching for something recently. Most people with similar searches were trying to do X, I was trying to do Y, which was different in subtle but important ways. There are tons of resources out there showing how to do X, but none showing how to do Y. The “AI” answer gave me directions for doing Y by showing me the procedure for doing X, with certain parts changed so that they match Y instead. It doesn’t work like that.

Like, imagine a recipe that not just uses sugar but that relies on key properties of sugar to work, something like caramel. Search for “how do I make caramel with stevia instead of sugar” and the AI gives you the recipe for making caramel with sugar, just with “stevia” replacing every mention of “sugar” in the original recipe. Absolutely useless, right? The correct answer would be “You can’t do that, the properties are just too different.” But, an LLM knows nothing, so it is happy just to substitute words in a recipe and be “helpful”.

Ironically, Google might be accelerating its own downfall as it tries to copy the “market”, considering LLMs are just a hole in its pocket.

That’s standard for emerging technologies. They tend to be loss leaders for quite a long period in the early years.

It’s really weird that so many people gravitate to anything even remotely critical of AI, regardless of context or even accuracy. I don’t really understand the aggressive need for so many people to see it fail.

For me personally, it’s because it’s been so aggressively shoved in my face in every context. I never asked for it, and I can’t escape it. It actively gets in my way at work (github copilot) and has already re-enabled itself at least once. I’d be much happier to just let it exist if it would do the same for me.

I just can’t see AI tools like ChatGPT ever being profitable. It’s a neat little thing that has flaws but generally works well, but I’m just putzing around in the free version. There’s no dollar amount that could be ascribed to the service that it provides that I would be willing to pay, and I think OpenAI has their sights set way too high with the talk of $200/month subscriptions for their top of the line product.

This summarizes it well: https://www.wheresyoured.at/wheres-the-money/

Wouldn’t call that a “summary”, but interesting read all the same. Thanks for the link.

Because there’s already been multiple AI bubbles (eg, ELIZA - I had a lot of conversations with FREUD running on an Apple IIe). It’s also been falsely presented as basically “AGI.”

AI models trained to help doctors recognize cancer cells - great, awesome.

AI models used as the default research tool for every subject - very very very bad. It’s also so forced - and because it’s forced, I routinely see that it has generated absolute, misleading, horseshit in response to my research queries. But your average Joe will take that on faith, your high schooler will grow up thinking that Columbus discovered Colombia or something.

I knew

YES

YES

FUCKING YES! THIS IS A WIN!

Hopefully they curtail their investments and stop wasting so much fucking power.

I think the best way I’ve heard it put is “if we absolutely have to burn down a forest, I want warp drive out of it. Not a crappy python app”

Correction, LLMs being used to automate shit doesn’t generate any value. The underlying AI technology is generating tons of value.

AlphaFold 2 has advanced biochemistry research in protein folding by multiple decades in just a couple years, taking us from 150,000 known protein structures to 200 Million in a year.

Yeah tbh, AI has been an insanely helpful tool in my analysis and writing. I would never have been able to thoroughly investigate the appropriate statistical tests on my own. That’s after following the sources and double checking, of course, but still, super helpful.

Well sure, but you’re forgetting that the federal government has pulled the rug out from under health research and therefore has made it so there is no economic value in biochemistry.

How is that a qualification on anything they said? If our knowledge of protein folding has gone up by multiples, then it has gone up by multiples, regardless of whatever funding shenanigans Trump is pulling or what effects those might eventually have. None of that detracts from the value that has already been delivered, so I don’t see how they are “forgetting” anything. At best, it’s a circumstance that may play in economically but doesn’t say anything about AI’s intrinsic value.

Was it just a facetious complaint?

OK yeah but… we can’t have nice things soooo

I think you’re confused, when you say “value”, you seem to mean progressing humanity forward. This is fundamentally flawed, you see, “value” actually refers to yacht money for billionaires. I can see why you would be confused.

Thanks. So the underlying architecture that powers LLMs has application in things besides language generation like protein folding and DNA sequencing.

alphafold is not an LLM, so no, not really

You are correct that AlphaFold is not an LLM, but they are both possible because of the same breakthrough in deep learning, the transformer, and so they do share similar architecture components.

And all that would not have been possible without linear algebra and calculus, and so on and so forth… Come on, the work on transformers is clearly separable from deep learning.

That’s like saying the work on rockets is clearly separable from thermodynamics.

A Large Language Model is a translator basically, all it did was bridge the gap between us speaking normally and a computer understanding what we are saying.

The actual decisions all these “AI” programs do are Machine Learning algorithms, and these algorithms have not fundamentally changed since we created them and started tweaking them in the 90s.

AI is basically a marketing term that companies jumped on to generate hype because they made it so the ML programs could talk to you, but they’re not actually intelligent in the same sense people are, at least by the definitions set by computer scientists.

What algorithm are you referring to?

The fundamental idea to use matrix multiplication plus a non linear function, the idea of deep learning i.e. back propagating derivatives and the idea of gradient descent in general, may not have changed but the actual algorithms sure have.
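For anyone who hasn't seen those unchanged basics written down, here is a minimal sketch in plain Python. The layer uses tanh as the nonlinearity and the gradient descent example minimises a toy quadratic; both are illustrative choices, not anything specific to a real model.

```python
import math


def dense_layer(weights, bias, x):
    """One layer: matrix-vector product plus bias, then a nonlinearity (tanh)."""
    return [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, bias)
    ]


def gradient_descent(grad, start, lr=0.1, steps=100):
    """Plain gradient descent on one scalar parameter:
    repeatedly step against the gradient."""
    w = start
    for _ in range(steps):
        w -= lr * grad(w)
    return w


# Forward pass through a 2x2 layer.
hidden = dense_layer([[0.5, -0.5], [1.0, 1.0]], [0.0, 0.0], [1.0, 1.0])

# Minimise (w - 3)^2, whose gradient is 2 * (w - 3).
w_star = gradient_descent(lambda w: 2 * (w - 3), start=0.0)
print(hidden, w_star)
```

Deep learning stacks many such layers and gets the gradients by backpropagation, but the two ingredients are the same.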

For example, the transformer architecture (utilized by most modern models) based on multi-head self-attention, optimizers like AdamW, and the whole idea of diffusion for image generation are, I would say, quite disruptive.
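To make the self-attention part concrete, here is a stripped-down, single-head sketch in plain Python. It is a simplification: real transformers apply learned query, key and value projections and run several heads in parallel, whereas here the token vectors are used directly as queries, keys and values.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention without learned projections:
    each output is a weighted average of all token vectors, weighted by
    how similar they are to the current token."""
    d = len(tokens[0])
    outputs = []
    for q in tokens:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens
        ]
        weights = softmax(scores)
        # Mix the token vectors using the attention weights.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)]
        )
    return outputs


print(self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]))
```

Every token gets to look at every other token in one step, which is the part that differs from the older recurrent approaches.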

Another point is that generative AI was always belittled in the research community, until like 2015 (subjective feeling, would need a meta study to confirm). The focus was mostly on classification, something not much talked about today in comparison.

Wow i didn’t expect this to upset people.

When I say it hasn’t fundamentally changed from an AI perspective i mean there is no intelligence in artificial Intelligence.

There is no true understanding of self, just what we expect to hear. There is no problem solving, the step by steps the newer bots put out are still just ripped from internet search results. There is no autonomous behavior.

AI does not meet the definitions of AI, and no amount of long winded explanations of fundamentally the same approach will change that, and neither will spam downvotes.

Btw I didn’t down vote you.

Your reply begs the question which definition of AI you are using.

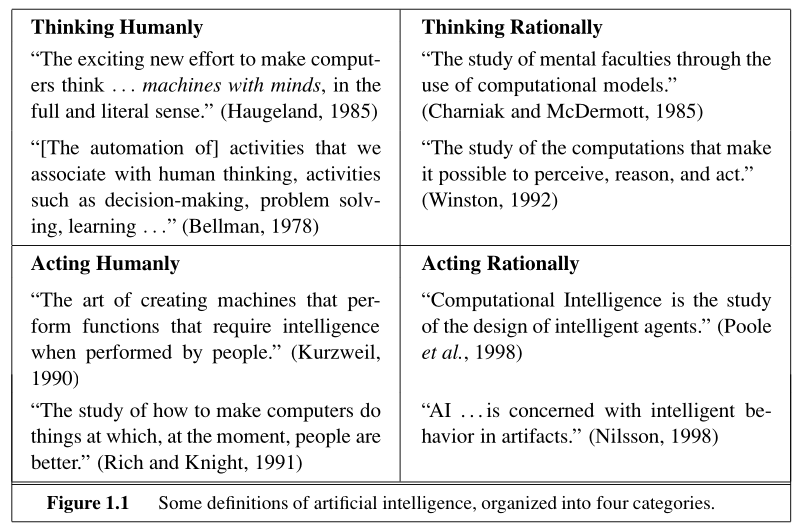

The above is from Russell and Norvig’s “Artificial Intelligence: A Modern Approach” 3rd edition.

I would argue that of these 8 definitions, 6 apply to modern deep learning stuff. Only the category titled “Thinking Humanly” would agree with you, but I personally think those definitions seem to be self-defeating, i.e. they define AI in a way that is so dependent on humans that a machine could never have AI, which would make the word meaningless.

I’m just sick of marketing teams calling everything AI and ruining what used to be a clear goal by getting people to move the bar and compromise on what used to be rigid definitions.

I studied AI in school and am interested in it as a hobby, but these machines aren’t at the point of intelligence, despite us making them feel real.

I base my personal evaluations comparing it to an autonomous being with all the attributes I described above.

ChatGPT, and other chatbots, knows what it is because it searches the web for itself, and in fact it was programmed to repeat canned responses about itself when asked because it was saying crazy shit it was finding on the internet before.

Sam Altman and many other big names in tech have admitted that we have pretty much reached the limits of what current ML models can achieve, and we basically have to reinvent a new and more efficient method of ML to keep going.

If we were to go off Alan Turing’s last definition then many would argue even ChatGPT meets those definitions, but even he increased and refined his definition of AI over the years before he died.

Personally I don’t think we’re there yet, and by the definitions I was taught back before AI could be whatever people called it we aren’t there either. I’m trying to find who specifically made the checklist for intelligence I remember, if I do I will post it here.

I’m afraid you’re going to have to learn about AI models besides LLMs

It’s always important to double check the work of AI, but yea it excels at solving problems we’ve been using brute force on

Image recognition models are also useful for astronomy. The largest black hole jet was discovered recently, and it was done, in part, by using an AI model to sift through vast amounts of data.

https://www.youtube.com/watch?v=wC1lssgsEGY

This thing is so big, it travels between voids in the filaments of galactic super clusters and hits the next one over.

AI is just what we call automation until marketing figures out a new way to sell the tech. LLMs are generative AI, hardly useful or valuable, but new and shiny, with a party trick that tickles the human brain in a way that makes people give their money to others. Machine learning and other forms of AI have been around for longer and most have value-generating applications but aren’t as fun to demonstrate, so they never got the traction LLMs have gathered.

For a lot of years, computers added no measurable productivity improvements. They sure revolutionized the way things work in all segments of society for something that doesn’t increase productivity.

AI is an inflating bubble: excessive spending, unclear use case. But it won’t take long for the pop, clearing out the failures, making the successful use cases clearer, and letting the winning approaches emerge. This is basically the definition of capitalism

What time span are you referring to when you say “for a lot of years”?

Vague memories of many articles over much of my adult life decrying the costs of whatever the current trend with computers is being higher than the benefits.

And I believe it, it’s technically true. There seems to be a pattern of bubbles where everyone jumps on the new hot thing and spends way too much money on it. It’s counterproductive, right up until the bubble pops, leaving the transformative successes.

Or I believe it was a long term thing with electronic forms and printers. As long as you were just adding steps to existing business processes, you don’t see productivity gains. It took many years for businesses to reinvent the way they worked to really see the productivity gains

If you want a reference, there is a Rational Reminder Podcast episode (nerdy and factual personal finance podcast from a Canadian team) about this concept. It was illustrated with trains or phone infrastructure 100 years ago: new technology looks nice -> people invest stupid amounts in a variety of projects -> some crash, bringing stock valuations back to a reasonable level, and at that point the technology is adopted and its infrastructure has been subsidized by those who lost money on the stock market hot thing. Then a new hot thing emerges. The Internet got its cycle in 2000, maybe AI is the next one. Usually every few decades the top 10 in the S&P 500 changes.

And crashing the markets in the process… At the same time they came out with a bunch of mumbo jumbo and scifi babble about having a million qubit quantum chip… 😂

Tech is basically trying to push up the stocks one hype idea after another. Social media bubble about to burst? AI! AI about to burst? Quantum! I’m sure that when people will start realizing quantum computing is another smokescreen, a new moronic idea will start to gain steam from all those LinkedIn “luminaries”

Quantum computation is a lot like fusion.

We know how it works and we know that it would be highly beneficial to society but, getting it to work with reliability and at scale is hard and expensive.

Sure, things get over hyped because capitalism but that doesn’t make the technology worthless… It just shows how our economic system rewards lies and misleading people for money.

It also can solve only a limited set of problems. People are under the impression that they can suddenly game at 10k with full path ray tracing if they have a quantum CPU, while in reality for 99.9% of problems it is only as fast as normal CPUs

That doesn’t make it worthless.

People are often wrong about technology, that’s independent of the technology’s usefulness. Quantum computation is incredibly useful for the applications that require it, things that are completely impossible to calculate with classical computers can be done using quantum algorithms.

This is true even if there are people on social media who think that it’s a new graphics card.

Absolutely, but its application is not as widespread as someone not into science might think. The only area where it might actually impact the average Joe is cryptography I think

Joseph Weizenbaum: “No shit? For realsies?”

That is not at all what he said. He said that creating some arbitrary benchmark on the level or quality of the AI (e.g. that it’s smarter than a 5th grader or as intelligent as an adult) is meaningless. That the real measure is whether there is value created and put out into the real world. He also mentions that global growth is up by 10%. He doesn’t provide data that correlates the growth with the use of AI and I doubt that such data exists yet. Let’s not just twist what he said to be “Microsoft CEO says AI provides no value” when that is not what he said.

AI is the immigrants of the left.

Of course he didn’t say this. But the media want you to think he did.

“They’re taking your jobs”

I think that’s pretty clear to people who get past the clickbait. Oddly enough though, if you read through what he actually said, the takeaway is basically a tacit admission, interpreted as him trying to establish a level-set on expectations from AI without directly admitting the strategy of massively investing in LLM’s is going bust and delivering no measurable value, so he can deflect with “BUT HEY CHECK OUT QUANTUM”.

Makes sense that the company that just announced their qubit advancement would be disparaging the only “advanced” thing other companies have shown in the last 5 years.

He probably saw that softbank and masayoshi son were heavily investing in it and figured it was dead.

JC Denton said it best in 2001:

I’m convinced the devs actually time traveled back from like 2035

Unlikely, all time travel technology will have been destroyed in the war, before 2035

Like all good sci-fi, they just took what was already happening to oppressed people and made it about white/American people, while adding a little misdirection by extrapolation from existing tech research. Only took about 20 years for Foucault’s boomerang to fully swing back around, and keep in mind that all the basic ideas behind LLMs had been worked out by the 80s, we just needed 40 more years of Moore’s law to make computation fast enough and data sets large enough.

Ah yes, same with Boolean logic, it only took a century for Moore’s law to pick up, they had a small milestone along the way when the transistor was invented. All computer science was already laid out by Boole from day 1, including everything that AI already does or will ever do.

/S

Foucault’s boomerang

fun fact, that idea predates foucault by a couple decades. ironically, it was coined by a black man from Martinique. i think he called it the imperial boomerang?

That would be worrying