I know people are gonna freak out about the AI part in this.

But as a person with hearing difficulties this would be revolutionary. So much shit I usually just can’t watch because open subtitles doesn’t have any subtitles for it.

The most important part is that it’s a local

LLMmodel running on your machine. The problem with AI is less about LLMs themselves, and more about their control and application by unethical companies and governments in a world driven by profit and power. And it’s none of those things, it’s just some open source code running on your device. So that’s cool and good.Also the incessant ammounts of power/energy that they consume.

Running an llm llocally takes less power than playing a video game.

The training of the models themselves also takes a lot of power usage.

They are using open source models that have already been trained. So no extra energy is going into the models.

Of course, I mean the training of the original models that the function is dependent on. It’s not caused by VLC itself of course.

Source?

I don’t have a source for that, but the most that any locally-run program can cost in terms of power is basically the sum of a few things: maxed-out gpu usage, maxed-out cpu usage, maxed-out disk access. GPU is by far the most power-consuming of these things, and modern video games make essentially the most possible use of the GPU that they can get away with.

Running an LLM locally can at most max out usage of the GPU, putting it in the same ballpark as a video game. Typical usage of an LLM is to run it for a few seconds and then submit another query, so it’s not running 100% of the time during typical usage, unlike a video game (where it remains open and active the whole time, GPU usage dips only when you’re in a menu for instance.)

Data centers drain lots of power by running a very large number of machines at the same time.

Training the model yourself would take years on a single machine. If you factor that into your cost per query, it blows up.

The data centers are (currently) mainly used for training new models.

But if you divide the cost of training by the number of people using the model, it should be pretty low.

From what I know, local LLMs take minutes to process a single prompt, not seconds, but I guess that depends on the use case.

But also games, dunno about maxing GPU in most games. I maxed mine for crypto mining, and that was power hungry. So I would put LLMs closer to crypto than games.

Not to mention games will entertain you way more for the same time.

Obviously it depends on your GPU. A crypto mine, you’ll leave it running 24/7. On a recent macbook, an LLM will run at several tokens per second, so yeah for long responses it could take more than a minute. But most people aren’t going to be running such an LLM for hours on end. Even if they do – big deal, it’s a single GPU, that’s negligible compared to running your dishwasher, using your oven, or heating your house.

Any paper about any neural network.

Using a model to get one output is just a series of multiplications (not even that, we use vector multiplication but yeah), it’s less than or equal to rendering ONE frame in 4k games.

I know you are agreeing with me, but it being a “series of multiplications” is not terribly informative, that’s basically a given. The question is how many flops, and how efficient are flops.

They aren’t using a LLM, this is a speech to text model like Whisper.

Curious how resource intensive AI subtitle generation will be. Probably fine on some setups.

Trying to use madVR (tweaker’s video postprocessing) in the summer in my small office with an RTX 3090 was turning my office into a sauna. Next time I buy a video card it’ll be a lower tier deliberately to avoid the higher power draw lol.

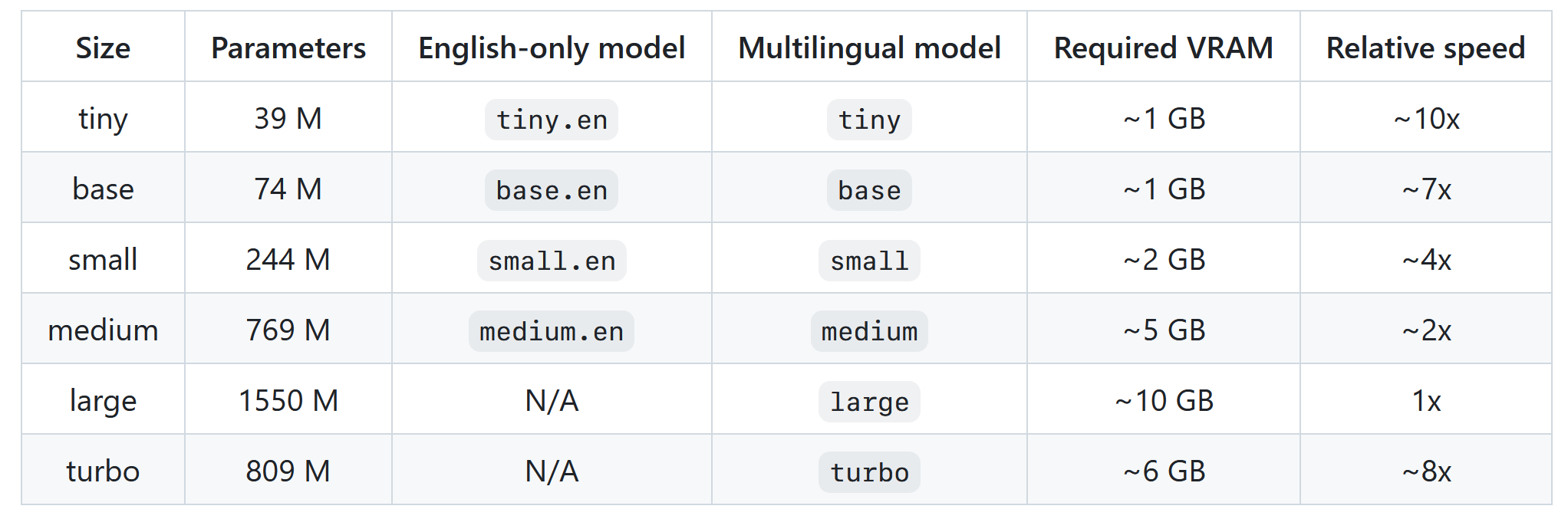

I think it really depends on how accurate you want / what language you are interpreting. https://github.com/openai/whisper has multiple variations on their model, but they all pretty much require VRAM/graphics capability (or likely NPUs as they become more commonplace).

Yeah, transcription is one of the only good uses for LLMs imo. Of course they can still produce nonsense, but bad subtitles are better none at all.

Just an important note, speech to text models aren’t LLMs, which are literally “conversational” or “text generation from other text” models. Things like https://github.com/openai/whisper are their own, separate types of models, specifically for transcription.

That being said, I totally agree, accessibility is an objectively good use for “AI”

That’s not what LLMs are, but it’s a marketing buzzword in the end I guess. What you linked is a transformer based sequence-to-sequence model, exactly the same principal as ChatGPT and all the others.

I wouldn’t say it is a good use of AI, more like one of the few barely acceptable ones. Can we accept lies and hallucinations just because the alternative is nothing at all? And how much energy/CO2 emissions should we be willing to waste on this?

Indeed, YouTube had auto generated subtitles for a while now and they are far from perfect, yet I still find it useful.

I agree that this is a nice thing, just gotta point out that there are several other good websites for subtitles. Here are the ones I use frequently:

https://subdl.com/

https://www.podnapisi.net/

https://www.subf2m.co/And if you didn’t know, there are two opensubtitles websites:

https://www.opensubtitles.com/

https://www.opensubtitles.org/Not sure if the .com one is supposed to be a more modern frontend for the .org or something but I’ve found different subtitles on them so it’s good to use both.

accessibility is honestly the first good use of ai. i hope they can find a way to make them better than youtube’s automatic captions though.

The app Be My Eyes pivoted from crowd sourced assistance to the blind, to using AI and it’s just fantastic. AI is truly helping lots of people in certain applications.

There are other good uses of AI. Medicine. Genetics. Research, even into humanities like history.

The problem always was the grifters who insist calling any program more complicated than adding two numbers AI in the first place, trying to shove random technologies into random products just to further their cancerous sales shell game.

The problem is mostly CEOs and salespeople thinking they are software engineers and scientists.

deleted by creator

I know Jeff Geerling on Youtube uses OpenAIs Whisper to generate captions for his videos instead of relying on Youtube’s. Apparently they are much better than Youtube’s being nearly flawless. I would have a guess that Google wants to minimize the compute that they use when processing videos to save money.

Spoiler: they won’t

Spoiler, they will! I use FUTO keyboard on android, it’s speech to text uses an ai model and it is amazing how great it works. The model it uses is absolutely tiny compared to what a PC could run so VLC’s implementation will likely be even better.

I also use FUTO and it’s great. But subtitles in a video are quite different than you clearly speaking into a microphone. Even just loud music will mess with a good Speech-to-text engine let alone [Explosions] and [Fighting Noises]. At the least I hope it does pick up speech well.

Still no live audio encoding without CLI (unless you stream to yourself), so no plug and play with Dolby/DTS

Encoding params still max out at 512 kpbs on every codec without CLI.

Can’t switch audio backends live (minor inconvenience, tbh)

Creates a barely usable non standard M3A format when saving a playlist.

I think that’s about my only complaints for VLC. The default subtitles are solid, especially with multiple text boxes for signs. Playback has been solid for ages. Handles lots of tracks well, and doesn’t just wrap ffmpeg so it’s very useful for testing or debugging your setup against mplayer or mpv.

Et tu, Brute?

VLC automatic subtitles generation and translation based on local and open source AI models running on your machine working offline, and supporting numerous languages!

Oh, so it’s basically like YouTube’s auto-generatedd subtitles. Never mind.

Hopefully better than YouTube’s, those are often pretty bad, especially for non-English videos.

They are terrible.

Youtube’s removal of community captions was the first time I really started to hate youtube’s management, they removed an accessibility feature for no good reason, making my experience with it significantly worse. I still haven’t found a replacement for it (at least, one that actually works)

and if you are forced to use the auto-generated ones remember no [__] swearing either! as we all know disabled people are small children who need to be coddled!

Same here. It kick-started my hatred of YouTube, and they continued to make poor decision after poor decision.

They’re awful for English videos too, IMO. Anyone with any kind of accent(read literally anyone except those with similar accents to the team that developed the auto-caption) it makes egregious errors, it’s exceptionally bad with Australian, New Zealand, English, Irish, Scottish, Southern US, and North Eastern US. I’m my experience “using” it i find it nigh unusable.

ELEVUHN

ELEVUHNTry it with videos featuring Kevin Bridges, Frankie Boyle, or Johnny Vegas

I’ve been working on something similar-ish on and off.

There are three (good) solutions involving open-source models that I came across:

- KenLM/STT

- DeepSpeech

- Vosk

Vosk has the best models. But they are large. You can’t use the gigaspeech model for example (which is useful even with non-US english) to live-generate subs on many devices, because of the memory requirements. So my guess would be, whatever VLC will provide will probably suck to an extent, because it will have to be fast/lightweight enough.

What also sets vosk-api apart is that you can ask it to provide multiple alternatives (10 is usually used).

One core idea in my tool is to combine all alternatives into one text. So suppose the model predicts text to be either “… still he …” or “… silly …”. My tool can give you “… (still he|silly) …” instead of 50/50 chancing it.

In my experiments, local Whisper models I can run locally are comparable to YouTube’s — which is to say, not production-quality but certainly better then nothing.

I’ve also had some success cleaning up the output with a modest LLM. I suspect the VLC folks could do a good job with this, though I’m put off by the mention of cloud services. Depends on how they implement it.

All hail the peak humanity levels of VLC devs.

FOSS FTW

I am still waiting for seek previews

MPC-BE

I was part of that!

I’ve been waiting for

thisbreak-free playback for a long time. Just play Dark Side of the Moon without breaks in between tracks. Surely a single thread could look ahead and see the next track doesn’t need any different codecs launched, it’s technically identical to the current track, there’s no need to have a break. /rantI know AI has some PR issues at the moment but I can’t see how this could possibly be interpreted as a net negative here.

In most cases, people will go for (manually) written subtitles rather than autogenerated ones, so the use case here would most often be in cases where there isn’t a better, human-created subbing available.

I just can’t see AI / autogenerated subtitles of any kind taking jobs from humans because they will always be worse/less accurate in some way.

Autogenerated subtitles are pretty awesome for subtitle editors I’d imagine.

even if they get the words wrong, but the timestamps right, it’d still save a lot of time

We started doing subtitling near the end of my time as an editor and I had to create the initial English ones (god forbid we give the translation company another couple hundred bucks to do it) and yeah…the timestamps are the hardest part.

I can type at 120 wpm but that’s not very helpful when you can only write a sentence at a time

Yeah this is exactly what we should want from AI. Filling in an immediate need, but also recognizing it won’t be as good as a pro translation.

I can’t see how this could possibly be interpreted as a net negative here

Not judging this as bad or good, but for sure if it’s offline generated it will bloat the size of the program.

It’s nice to see a good application of ai. I hope my low end stuff will be able to run it.

Solving problems related to accessibility is a worthy goal.

And yet they still can’t seek backwards

Iirc this is because of how they’ve optimized the file reading process; it genuinely might be more work to add efficient frame-by-frame backwards seeking than this AI subtitle feature.

That said, jfc please just add backwards seeking. It is so painful to use VLC for reviewing footage. I don’t care how “inefficient” it is, my computer can handle any operation on a 100mb file.

If you have time to read the issue thread about it, it’s infuriating. There are multiple viable suggestions that are dismissed because they don’t work in certain edge cases where it would be impossible for any method at all to work, and which they could simply fail gracefully for.

That kind of attitude in development drives me absolutely insane. See also: support for DHCPv6 in Android. There’s a thread that has been raging for I think over a decade now

I now know more about Android IPv6 than ever before

Same for simply allowing to pause on click… Luckily extension exists but it’s sad that you need one.

Perhaps we could also get a built-in AI tool for automatic subtitle synchronization?

I’m fine watching porn without subtitles

Why are you using VLC for porn? You download porn?!

my state banned pornhub so I made a big ass stash just in case, so yeah I guess. I also have a stash of music from YouTube in case they ever fully block YT-DLP, so I’m just a general data hoarder.

Land of the Free!

Now if only I could get it to play nice with my Chromecast… But I’m sure that’s on Google.

Or shitty mDNS implementations