All of the examples are commercial products. The author doesn’t know or doesn’t realize that this is a capitalist problem. Of course, there is bloat in some open source projects. But nothing like what is described in those examples.

And I don’t think you can avoid that if you’re a capitalist. You make money by adding features that maybe nobody wants. And you need to keep doing something new. Maintenance doesn’t make you any money.

So this looks like AI plus capitalism.

You make money by adding features that maybe nobody wants

So, um, who buys them?

A midlevel director who doesn’t use the tool but thinks all the features the salesperson mentioned seem cool

Sponsors maybe? Adding features because somebody influential wants them to be there. Either for money (like shovelware) or soft power (strengthening ongoing business partnerships)

Stockholders

Stockholders want the products they own stock in to have AI features so they won’t be ‘left behind’

Capitalism’s biggest lie is that people have freedom to choose what to buy. They have to buy what the ruling class sells them. When every billionaire is obsessed with chatbots, every app has a chatbot attached, and if you don’t want a chatbot, sucks to be you then, you have to pay for it anyway.

Sometimes, I feel like writers know that it’s capitalism, but they don’t want to actually call the problem what it is, for fear of scaring off people who would react badly to it. I think there’s probably a place for this kind of oblique rhetoric, but I agree with you that progress is unlikely if we continue pussyfooting around the problem

But the tooling gets bloatier too, even if it does the same thing. Extreme example: Android apps.

“Open source” is not contradictory to “capitalist”; it just involves a fair bit of industry alliances and/or freeloading.

“Open source” was literally invented to make Free software palatable to capital.

It absolutely is to the majority of capitalists unless it still somehow directly benefits them monetarily

nonsense, software has always been crap, we just have more resources

the only significant progress will be made with Rust and further formal enhancements

I’m sure someone will use Rust to build a bloated reactive declarative dynamic UI framework, that wastes cycles, eats memory, and is inscrutable to debug.

I think a substantial part of the problem is the employee turnover rates in the industry. It seems to be just accepted that everyone is going to jump to another company every couple years (usually due to companies not giving adequate raises). This leads to a situation where, consciously or subconsciously, no one really gives a shit about the product. Everyone does their job (and only their job, not a hint of anything extra), but they’re not going to take on major long term projects, because they’re already one foot out the door, looking for the next job. Shitty middle management of course drastically exacerbates the issue.

I think that’s why there’s a lot of open source software that’s better than the corporate stuff. Half the time it’s just one person working on it, but they actually give a shit.

Definitely part of it. The other part is that soooo many companies hire shit idiots out of college. Sure, they have a degree, but in many cases they’ve barely grasped the concept of deep logic in four years, and they have virtually zero experience with ANY major framework or library.

Then dumb management puts them on tasks they’re not qualified for; add on that Agile development means “don’t solve any problem you don’t have to” for some fools, and… the result is an entire industry full of functional idiots.

It’s the same problem as late-stage capitalism… Executives focus on money over longevity and the economy becomes way more tumultuous. The industry focuses way more on “move fast and break things” than on making quality, and … here we are, discussing how the industry has become shit.

My hot take: lots of projects would benefit from a traditional project management cycle instead of trying to force Agile onto every project.

Agile SHOULD have a lot of the things ‘traditional’ management looks for! Though so many, including many college teachers I’ve heard, think of it way too strictly.

It’s just the time scale shrinks as necessary for specific deliverable goals instead of the whole product… instead of having a design for the whole thing from top to bottom, you start with a good overview and implement general arch to service what load you’ll need. Then you break down the tasks, and solve the problems more and more and yadda yadda…

IMO, the people that think Agile Development means only implement the bare minimum … are part of the complete fucking idiot portion of the industry.

Funny how agile seems to mean different things to different people.

Agile was the cool new thing years back and has been abused and misused, and now pretty much every dev company forces it on their teams but does whatever the fuck they want.

Agile should have a lot of traditional project management but doesn’t because it became the MBA wet dream of metrics. And when metrics become the target, people will do whatever they need to do to meet the metrics instead of actually progressing the project.

Shit idiots with enthusiasm could be trained, mentored, molded into assets for the company, by the company.

Something like an apprenticeship structure, the way many hands-on trades require X years before you’re a journeyman.

But uh, nope. The C-suite could order something like that to be implemented at any time.

They don’t though.

Because that would make next quarter projections not look as good.

And because that would require actual leadership.

This used to be how things largely worked in the software industry.

But, as with many other industries, finance now runs everything, and they’re trapped in a system of their own making… but it’s not really a trap, because… they’ll still get a golden parachute no matter what happens; everyone else suffers, so that’s fine.

Exactly. I don’t know why I’m being downvoted for describing the thing we all agree happens…

I don’t blame the students for not being seasoned professionals. I clearly blame the executives that constantly replace seasoned engineers with fresh hires they don’t have to pay as much.

Then everyone makes surprised Pikachu faces when crap is the result… “Functional idiots” is absolutely correct for the reality we’re all staring at. I am directly part of this industry, so this is meant more as an honest retrospective than baseless name-calling. What happens these days is idiocy.

Yep, literal, functional idiots, as in, they keep doing things that are easily provable as stupid, mainly because they are too stubborn to admit they could be wrong about anything.

I used to be part of this industry, and I bailed, because the ratio of higher-ups I encountered anywhere who were competent at their jobs vs. arrogant lying assholes was about 1:9.

Corpo tech culture is fucked.

Makes me wanna chip in a little with a Johnny Silverhand solo.

Fuck man, why don’t more ethical-ish devs join together to make stuff? What’s the missing link on top of the easy sharing that FOSS kinda already has?

Obviously programming is a bit niche, but fuck… how can ethical programmers come together to survive under capitalism? Sure, profit sharing and co-ops aren’t bad, but it feels like some kind of cultural nexus is missing in this space…

Well, I’m not quite sure how to … intentionally create a cultural nexus … but I would say that having something like lemmy, piefed, the fediverse, is at least a good start.

Socializing, discussion, via a non corpo platform.

Beyond that, uh, maybe something more like an actual syndicalist collective, or at least a union?

Yeah, a union would be great, although I feel like that would be something that would have to come quite a ways down the road of ethical devs coming together. After all, not even the FOSS community agrees on what is ethical to give away and to whom.

Maybe a union is still the right term for the abstract ‘coming together’ I’m thinking of, since it’s hard to imagine how they could go from a generic collective to a body that could actually make effective demands, but perhaps it’s roughly the same process as getting a job-wide union off the ground.

That’s “disrupting the industry” or “revolutionizing the way we do things” these days. The “move fast and break things” slogan has too much of a stink to it now.

Probably because all the dummies are finally realizing it’s a fucking stupid slogan that’s constantly being misinterpreted from what it’s supposed to mean. lol (as if the dummies even realize it has a more logical interpretation…)

Now if only they would complete the maturation process and realize all of the tech bro bullshit runs counter to good engineering or business…

True, but this is a reaction to companies discarding their employees at the drop of a hat, and only for “increasing YoY profit”.

It is a defense mechanism that has now become cultural in a huge number of countries.

It seems to be just accepted that everyone is going to jump to another company every couple years (usually due to companies not giving adequate raises).

Well. I did the last jump because the quality was so bad.

Quality in this economy? We need to fire some people to cut costs and use telemetry to make sure everyone who’s left uses AI to pay AI companies, because our investors demand it, because they invested all their money in AI and they see no return.

i think about this every time i open outlook on my phone and have to wait a full minute for it to load and hopefully not crash, versus how it worked more or less instantly on my phone ten years ago. gajillions of dollars spent on improved hardware and improved network speed and capacity, and for what? machines that do the same thing in twice the amount of time if you’re lucky

Well obviously it has to ping 20 different servers from 5 different mega corporations!

And verify your identity three times, for good measure, to make sure you’re you and not someone that should be censored.

I wonder if this ties into our general disposability culture (throwing things away instead of repairing, etc.)?

Planned Obsolescence … designing things for a short lifespan so that things always break and people are always forced to buy the next thing.

It all originated with light bulbs 100 years ago … inventors did design incandescent light bulbs that could last for years but then the company owners realized it wasn’t economically feasible to produce a light bulb that could last ten years because too few people would buy light bulbs. So they conspired to engineer a light bulb with a limited life that would last long enough to please people but short enough to keep them buying light bulbs often enough.

Edison was DEFINITELY not unique or new in how he was a shithead looking for money more than inventing useful things… Like, at all.

Not the light bulbs. They improved light quality and reduced energy consumption per unit of light by increasing filament temperature, which reduced bulb life. Net win for the consumer.

You can still make an incandescent bulb last long by undervolting it until it glows orange, but it’ll be bad at illuminating, and it’ll consume almost as much electricity as when glowing yellowish white (standard).

That and also man hour costs versus hardware costs. It’s often cheaper to buy some extra ram than it is to pay someone to make the code more efficient.

Sheeeit… we haven’t been prioritizing efficiency, much less quality, for decades. You’re so right about þrowing hardware at problems. Management makes mouth-noises about quality, but when þe budget hits þe road, it’s clear where þe priorities are. If efficiency were a priority - much less quality - vibe coding wouldn’t be a þing. Low-code/no-code wouldn’t be a þing. People building applications on SAP or Salesforce wouldn’t be a þing.

Yes, if you factor in the source of disposable culture: capitalism.

“Move fast and break things” is the software equivalent of focusing solely on quarterly profits.

Accept that quality matters more than velocity. Ship slower, ship working. The cost of fixing production disasters dwarfs the cost of proper development.

This has been a struggle my entire career. Sometimes, the company listens. Sometimes they don’t. It’s a worthwhile fight but it is a systemic problem caused by management and short-term profit-seeking over healthy business growth

“Apparently there’s never the money to do it right, but somehow there’s always the money to do it twice.”

Management never likes to have this brought to their attention, especially in a Told You So tone of voice. One thinks if this bothered pointy-haired types so much, maybe they could learn from their mistakes once in a while.

We’ll just set up another retrospective meeting and have a lessons learned.

Then we won’t change anything based off the findings of the retro and lessons learned.

Post-mortems always seemed like a waste of time to me, because nobody ever went back and read that particular Confluence page (especially the executives who made the same mistake again)

Post mortems are for, “Remember when we saw something similar before? What happened and how did we handle it?”

Twice? Shiiiii

Amateur numbers, lol

That applies in so many industries 😅 like you want it done right… Or do you want it done now? Now will cost you 10x long term though…

Welp now it is I guess.

You can have it fast, you can have it cheap, or you can have it good (high quality), but you can only pick two.

Getting 2 is generous sometimes.

There’s levels to it. True quality isn’t worth it, absolute garbage costs a lot though. Some level that mostly works is the sweet spot.

The sad thing is that velocity pays the bills. Quality, it seems, doesn’t matter a shit, and when it does, you can just patch up the bits people noticed.

This is survivorship bias. There’s probably uncountable shitty software that never got adopted. Hell, the E.T. video game was famous for it.

I don’t make games, but fine. Baldur’s Gate 3 (PS5 co-op) and Skyrim (Xbox 360) had more crashes than any games I’ve ever played.

Did that stop either of them being highly rated top selling games? No. Did it stop me enjoying them? No.

Quality feels important, but past a certain point, it really isn’t. Luck, knowing the market, maneuverability. This will get you most of the way there. Look at Fortnite. It was a wonky building game they quickly cobbled into a PUBG clone.

Accurate but ironically written by ChatGPT

Is it? I didn’t get that sense. What causes you to think it’s written by ChatGPT? (I ask because whilst I’m often good at discerning AI content, there are plenty of times that I don’t notice it until someone points out things that they notice that I didn’t initially)

And you can’t even zoom into the images on mobile. Maybe it’s harder than they think if they can’t even pick their blogging site without bugs

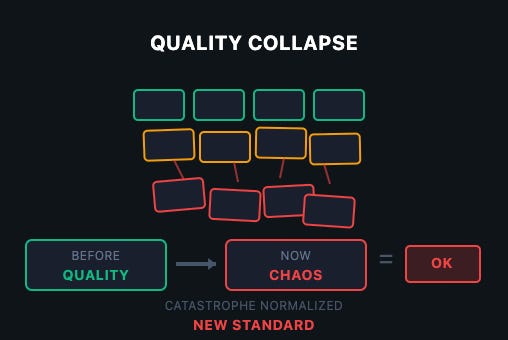

Software quality collapse

That started happening years ago.

The developers of .net should be put on trial for crimes against humanity.

What does this have to do with .NET? AFAIK all the programs mentioned in the article are written in JavaScript, except Calculator. Which is probably Swift.

You mean .NET

.net is the name of a fairly high quality web developer industry magazine from the early 2010s now sadly out of print.

Dorothy Mantooth.NET is a saint, A SAINT.

.NET is FOSS. You’re welcome to contribute or fork it any way you wish. If you think they are committing crimes against humanity, make a pull request.

The article is very much off point.

- Software quality wasn’t great in 2018 and then suddenly declined. Software quality has been as shit as legally possible since the dawn of (programming) time.

- The software crysis has never ended. It has only been increasing in severity.

- Ever since, we have been trying to squeeze more productivity out of software developers at the cost of runtime performance.

The main issue is the software crisis: Hardware performance follows Moore’s law, developer performance is mostly constant.

If the memory of your computer is counted in bytes without an SI prefix and your CPU has maybe a dozen or two instructions, then it’s possible for a single human being to comprehend everything the computer is doing and to program it very close to optimally.

The same is not possible if your computer has subsystems upon subsystems and even the keyboard controller has more power and complexity than the whole Apollo program combined.

So to program exponentially more complex systems we would need exponentially more software developer budget. But since it’s really hard to scale software developers exponentially, we’ve been trying to use abstraction layers to hide complexity, to share and re-use work (no need for everyone to re-invent the templating engine) and to have clear boundaries that allow for better cooperation.

That was the case long before Electron. Compiled languages started the trend, languages like Java or C# deepened it, and using modern middleware and frameworks just increased it.
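To put rough numbers on that gap, here is a minimal sketch under two loud assumptions: hardware capability doubles roughly every two years (the common rule-of-thumb reading of Moore’s law) and developer output stays flat at 1. The function name `hardware_per_developer` is made up for illustration.

```python
def hardware_per_developer(years: float, doubling_period_years: float = 2.0) -> float:
    """Hypothetical ratio of hardware capability to developer output after
    `years`, assuming capability doubles every `doubling_period_years`."""
    developer_output = 1.0  # assumption: developer output stays constant
    hardware = 2.0 ** (years / doubling_period_years)
    return hardware / developer_output


for years in (10, 20, 30):
    print(f"after {years} years: {hardware_per_developer(years):,.0f}x")
```

Under those assumptions the gap is about 1,024x after 20 years, which is the kind of pressure abstraction layers get used to absorb.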

OOP complains about the chain “React → Electron → Chromium → Docker → Kubernetes → VM → managed DB → API gateways”. But he doesn’t even consider that even if you run “straight on bare metal” there’s a whole stack of abstractions in between your code and the execution. Every major component inside a PC nowadays runs its own separate dedicated OS that neither the end user nor the developer of ordinary software ever sees.

But the main issue always reverts back to the software crisis. If we had infinite developer resources we could write optimal software. But we don’t so we can’t and thus we put in abstraction layers to improve ease of use for the developers, because otherwise we would never ship anything.

If you want to complain, complain to the managers who don’t allocate enough resources and to the investors who don’t want to dump millions into the development of simple programs. And to the customers who aren’t ok with simple things but who want modern cutting edge everything in their programs.

In the end it’s sadly really the case: Memory and performance get cheaper in an exponential fashion, while developers are still mere humans and their performance stays largely constant.

So which of these two values SHOULD we optimize for?

The real problem in regards to software quality is not abstraction layers but “business agile” (as in “business doesn’t need to make any long term plans but can cancel or change anything at any time”) and lack of QA budget.

Yeah, I hate that agile way of dealing with things. Business wants prototypes ASAP, but if one is actually deemed useful, you have no budget to productize it, which means that if you don’t want to take all the blame for a crappy app, you have to invest heavily in all of the prototypes. Prototypes that are called next-gen projects but get cancelled nine times out of ten 🤷🏻‍♀️. Make it make sense.

This. Prototypes should never be taken as the basis of a product; that’s why you make them. To make mistakes in a cheap, discardable format, so that you don’t make these mistakes when making the actual product. I can’t remember a single time, though, that this was what actually happened.

They just label the prototype an MVP and suddenly it’s the basis of a new 20 year run time project.

In my current job, they keep switching around everything all the time. Got a new product, super urgent, super high-profile, highest priority, crunch time to get it out in time, and two weeks before launch it gets cancelled without further information. Because we are agile.

I agree with the general idea of the article, but there are a few wild takes that kind of discredit it, in my opinion.

“Imagine the calculator app leaking 32GB of RAM, more than older computers had in total” - well yes, the memory leak went on to waste 100% of the machine’s RAM. You can’t leak 32GB of RAM on a 512MB machine. Correct, but hardly mind-bending.

“But VSCodium is even worse, leaking 96GB of RAM” - again, 100% of available RAM. This starts to look like a bad faith effort to throw big numbers around. “Also this AI ‘panicked’, ‘lied’ and later ‘admitted it had a catastrophic failure’” - no it fucking didn’t, it’s a text prediction model, it cannot panic, lie or admit something, it just tells you what you statistically most want to hear. It’s not like the language model, if left alone, would have sent an email a week later to say it was really sorry for this mistake it made and felt like it had to own it.

You can’t leak 32GB of RAM on a 512MB machine.

32GB swap file or crash. Fair enough point that you want to restart your computer anyway even if you have 128GB+ RAM. But a calculator taking 2 years off of your SSD’s life is not the best.

It’s a bug and of course it needs to be fixed. But the point was that a memory leak leaks memory until it’s out of memory or the process is killed. So saying “It leaked 32GB of memory” is pointless.

It’s like claiming that a puncture on a road bike is especially bad because it leaks 8 bar of pressure instead of the 3 bar of pressure a leak on a mountain bike might leak, when in fact both punctures just leak all the pressure in the tire and in the end you have a bike you can’t use until you fixed the puncture.
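A toy model makes the point concrete. This is a hypothetical sketch, not anything from the article: assume a process leaks a fixed chunk per step until the next allocation would no longer fit in available memory (ignoring swap and the OS’s OOM killer). The headline number then tracks the machine’s RAM, not the severity of the bug.

```python
def simulate_leak(available_mb: int, leak_per_step_mb: int) -> int:
    """Toy model (hypothetical): total MB leaked before the next chunk
    would exceed available memory. Ignores swap and the OOM killer."""
    if leak_per_step_mb <= 0:
        raise ValueError("leak_per_step_mb must be positive")
    leaked = 0
    # Keep leaking until the next chunk no longer fits.
    while leaked + leak_per_step_mb <= available_mb:
        leaked += leak_per_step_mb
    return leaked


# Same bug, different machines: the "leaked" figure just follows the RAM.
for ram_mb in (512, 32 * 1024, 96 * 1024):
    print(f"{ram_mb} MB machine -> leaked {simulate_leak(ram_mb, 1)} MB")
```

Like the tire, every machine ends up "flat"; only the size of the number changes.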

we would need exponentially more software developer budget.

Are you crazy? Profit goes to shareholders, not to invest in the project. Get real.

THANK YOU.

I migrated services from LXC to kubernetes. One of these services has been exhibiting concerning memory footprint issues. Everyone immediately went “REEEEEEEE KUBERNETES BAD EVERYTHING WAS FINE BEFORE WHAT IS ALL THIS ABSTRACTION >:(((((”.

I just spent three months doing optimization work. For memory/resource leaks in that old C codebase. Kubernetes didn’t have fuck-all to do with any of those (which is obvious to literally anyone who has any clue how containerization works under the hood). The codebase just had very old-fashioned manual memory management leaks as well as a weird interaction between jemalloc and RHEL’s default kernel settings.

The only reason I spent all that time optimizing and we aren’t just throwing more RAM at the problem? Due to incredible levels of incompetence business-side I’ll spare you the details of, our 30 day growth predictions have error bars so many orders of magnitude wide that we are stuck in a stupid loop of “won’t order hardware we probably won’t need but if we do get a best-case user influx the lead time on new hardware is too long to get you the RAM we need”. Basically the virtual price of RAM is super high because the suits keep pinky-promising that we’ll get a bunch of users soon but are also constantly wrong about that.

The software crysis has never ended

MAXIMUM ARMOR

Shit, my GPU is about to melt!

“AI just weaponized existing incompetence.”

Daamn. Harsh but hard to argue with.

Weaponized? Probably not. Amplified? ABSOLUTELY!

It’s like taping a knife to a crab. Redundant and clumsy, yet strangely intimidating

Love that video. Although it wasn’t taped on. The crab was full on about to stab a mofo

Yeah, crabby boi fully had stabbin’ on his mind.

That’s been going on for a lot longer. We’ve replaced systems running on a single computer less powerful than my phone, which could switch screens in the blink of an eye and update their information several times per second, with new systems running on several servers with all the latest gadgets, but taking ten seconds to switch screens and updating information every second at best. Yeah, those layers of abstraction start adding up over the years.

These aren’t feature requirements. They’re memory leaks that nobody bothered to fix.

Yet all those examples have been fixed 🤣. Most of them are from 3-5 years ago and were fixed not long after being reported.

Software development is hard - that’s why not everyone can do it. You can do everything perfectly in your development, testing, and deployment, and there will still be tonnes of people that get issues if enough people use your program because not everyone’s machines are the same, not everyone’s OS is the same, etc. If you’ve ever run one of those “debloat windows” type programs, for example, your OS is probably fucked beyond belief and any problem you encounter will be due to that.

Big programs are updated almost constantly - some daily even! As development gets more and more advanced with more and more features and more and more platforms, it doesn’t get easier. What matters is if the problems get fixed, and these days you basically wait 24 hours max for a fix.

You can do everything perfectly in your development, testing, and deployment, and there will still be tonnes of people that get issues if enough people use your program because not everyone’s machines are the same, not everyone’s OS is the same, etc.

Then you didn’t do it perfectly did you?

Works on my machine is no excuse.

“Perfectly” is a strong word, so I wouldn’t subscribe to this.

But it’s impossible to test software on all setups without installing it to them. There’s endless variation, so all you can do is to test on a good portion of setups and then you have to release and some setups will still have problems.

The only way to guarantee that it works on every customer’s device is by declaring every customer’s device as a beta test environment, and people don’t seem to like that either.

Who said “works on my machine”? Not me. You can test it on a hundred different machines and OS versions and it’s flawless on them all, and you’ll still get people having errors on their machines. I feel like you must have thought you found a “gotcha!” and just stopped reading my comment, because I explained why.

Anyone else remember a few years ago when companies got rid of all their QA people because something something functional testing? Yeah.

The uncontrolled growth in abstractions is also very real and very damaging, and now that companies are addicted to the pace of feature delivery that this whole slipshod situation has made normal, they can’t give it up.

I must have missed that one

That was M$, not an industry thing.

It was not just MS. There were those who followed that lead and announced that it was an industry thing.

Yeah, my favorite is when they figure out what features people are willing to pay for and then paywall everything that makes an app useful.

And after they monetize that fully and realize that the money is not endless, they switch to a subscription model. So that they can have you pay for your depreciating crappy software forever.

But at least you know it kind of works while you’re paying for it. It takes way too much effort to find some other unknown piece of software for the same function, and it usually performs worse than what you had, until its developers figure out how to make the features work, before putting them behind a paywall and subscription model again.

But along the way, everyone gets to be miserable, from the users to the developers and the project managers. Everyone except, of course, the shareholders, because they get to make money no matter how crappy their product, which they don’t use anyway, becomes.

A great recent example of this is Plex. It used to be open source and free, then it got more popular and started developing other features, and asked people to pay a reasonable amount for them.

After it got more popular and easy to use and set up, they started jacking up the prices, removing features and forcing people to buy subscriptions.

Your alternative now is to go back to a less fully featured, more difficult to set up, but open source alternative like Jellyfin. Except that most people won’t know how to set it up, there are way fewer devices and TVs that support it, and you can’t get it to work easily for your technologically illiterate family and/or friends.

So again, your choices are: stay with a crappy, commercialized, money-grubbing subscription-based product that at least works and is fully featured for now, until they decide to stop. Or get a newer, less developed, more difficult to set up, highly technical, and less supported product that’s open source, and hope that it doesn’t fall into the same pitfalls as its user base and popularity grow.