Prompt: list the next friday february 9ths occurring on leap years

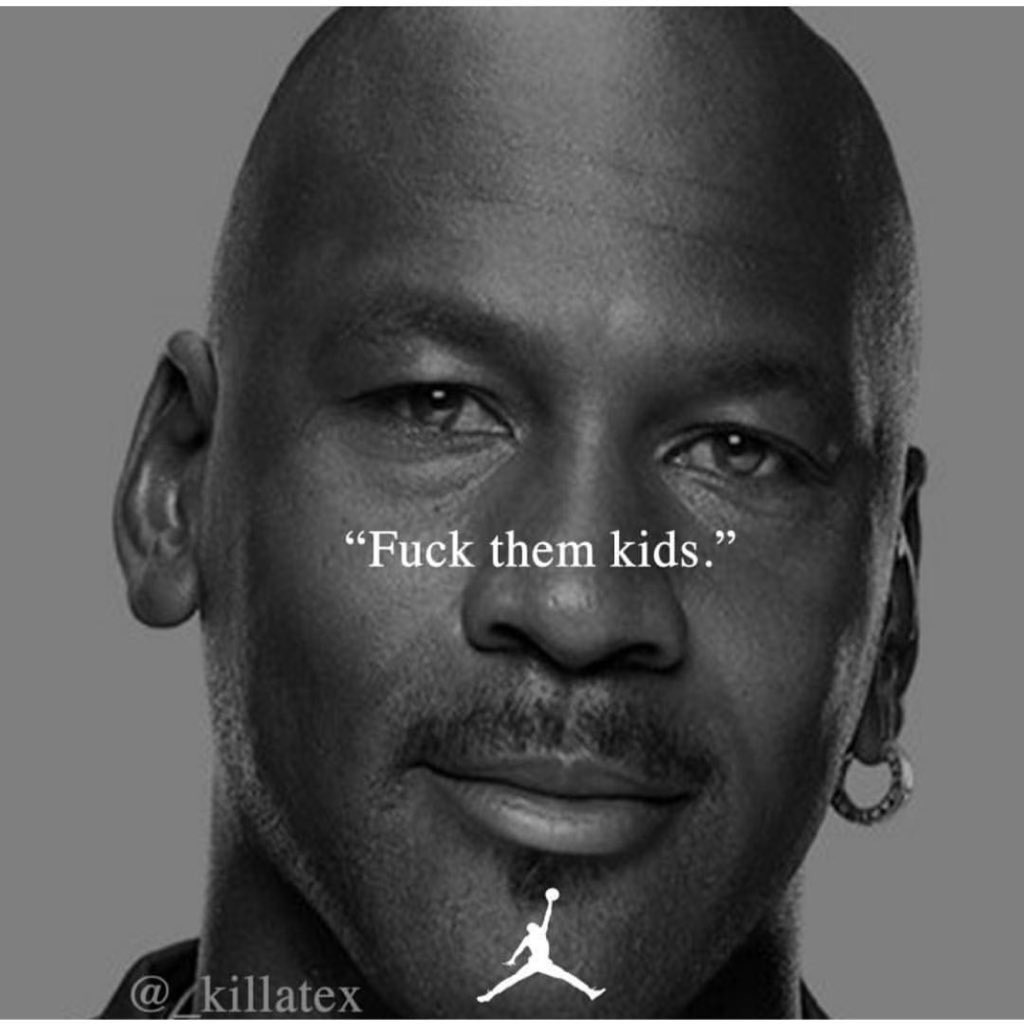

Response: Unfortunately, there is no such thing as a Friday, February 9th occurring on a leap year. Here’s why:

• February only has 28 days in a typical year and 29 days in a leap year.

• Since a month consists of 4 weeks (28 days), the day of the week for any particular date repeats every 4 years.

• This means that if February 9th falls on a Friday in a non-leap year, it will fall on a Monday in the next year, Tuesday in the year after that, Wednesday in the third year, and back to Friday in the fourth year.

• However, since the fourth year is a leap year with an extra day in February, February 9th “shifts” by one day, falling on a Saturday instead of Friday. Therefore, it’s impossible for February 9th to be a Friday in a leap year. The next Friday, February 9th will occur in 2029, but it will be a common year, not a leap year.

I could teach a college course on the amount of incorrect information in this response.
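For anyone curious, the prompt itself is easy to check mechanically. Below is a minimal sketch using only Python's standard library (`calendar.isleap` and `datetime.date.weekday`, where Monday is 0 and Friday is 4). The function name `friday_feb9_leap_years` and the year range are illustrative choices, not anything from the thread. Note that the Gregorian rule makes 2100 a common year, which is why the spacing between matches is not a clean 28 years across a century boundary.

```python
import calendar
from datetime import date

def friday_feb9_leap_years(start, end):
    """Return leap years in [start, end] whose February 9th falls on a Friday."""
    return [
        year
        for year in range(start, end + 1)
        # calendar.isleap applies the full Gregorian rule (2100 is NOT a leap year)
        if calendar.isleap(year) and date(year, 2, 9).weekday() == calendar.FRIDAY
    ]

print(friday_feb9_leap_years(2024, 2125))  # [2024, 2052, 2080, 2120]
```

So a Friday, February 9th in a leap year is perfectly possible; 2024 itself was one.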

some other winners from Gemini and ChatGPT

Copilot nailed it

did you know people born on leap years only emerge and exist on leap years?

They’re part cicada though, so there’s that.

That explains so much.

That would actually be a good concept for a scifi or romantic movie: “The Leaper”

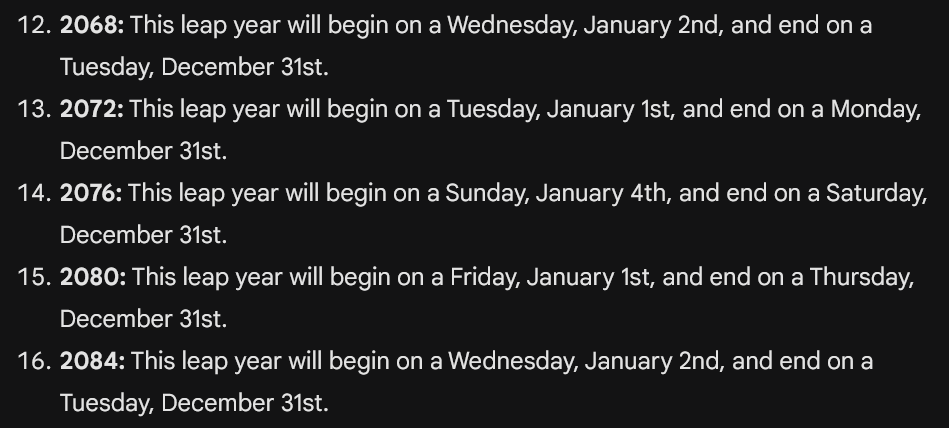

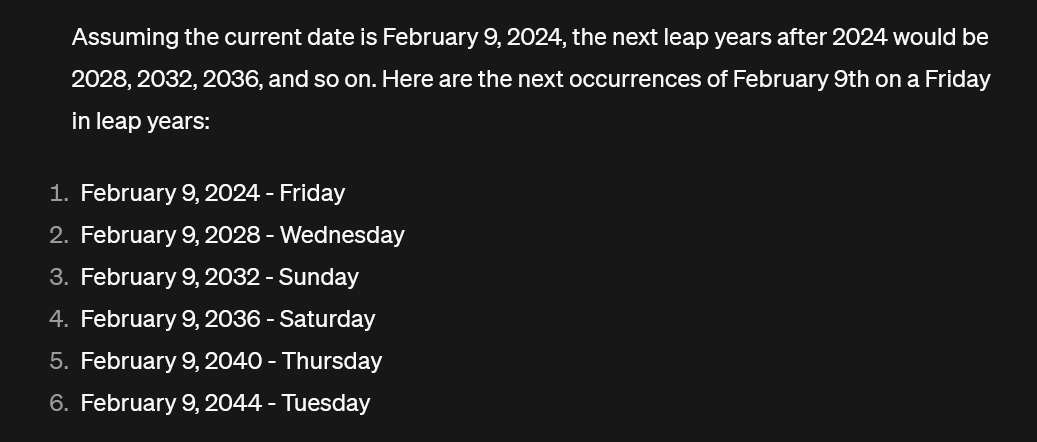

Gemini Advanced (Ultra) gets it right:

Saying please to an AI is funny to me. But I guess I will be the first to die in the robot uprising.

Or maybe saying please got you the correct answer and it’s a lesson to always use your manners!

Just because it’s not human doesn’t mean I can’t show some basic manners. 😅

How is it performing overall? Would you use it over chatgpt? Does it integrate with the rest of my Google account?

It seems more expressive than GPT-4 in general and better at itemizing information, but it’s a bit worse at keeping track of context and seems to hallucinate more than GPT-4 (but less than GPT-3). I’m currently on the free trial period so I’ll try it out for these two months and then see if it justifies switching over (the subscription is the same price so that won’t be the deal-breaker). One thing that it’s noticeably better at is researching online, probably because it uses Google as a backend instead of Bing lol.

It’s supposed to integrate with other services if you use the Android/iOS app, as it can replace Google Assistant entirely and get access to info from other Google services. However, the app, along with image generation, isn’t yet available in the EU and a few other countries. Classic case of EU favoritism towards Microsoft and not sanctioning it like it does Apple and Google… It’s also rumored that the ChatGPT Android app will get Assistant API integration “soon”, but that’s not available yet either (anywhere, AFAIK). Even if that happens, Google can presumably add deeper system-level integrations, given that it’s their OS and their ecosystem.

I have experienced the same differences you mention. I would like to add that it’s a lot faster than GPT4.

EU favoritism of Microsoft? The same EU that fined Internet Explorer basically out of existence?

If anything, it’s Microsoft’s much savvier handling of their AI policies that makes them less of a target. Copilot is very clear that they aren’t entitled to use your data to train the base model, which is in stark contrast to OpenAI’s and Google’s agreements, which basically say that anything entered into them is fair game for them to use for training.

Based on experience since the inception of LLMs, and running my own locally since then, I’m convinced that free LLM access from anyone, OpenAI or Google, is deliberately made false and incorrect with information, while the paid models aren’t. Just another drive towards enshittified consumer capitalism.

As if they have fine control over these models… Anyone who’s been playing with language models has seen this false confidence problem, and the way they’re “solving” it is by training larger models.

They don’t need to enshittify their lower models when they’re already bad. Not everything that doesn’t work as you expect is enshittification. If we could stop overusing this word and focus it on the actual things that deteriorate over time, that’d be great.

Free access probably gets the sub-billion-parameter models, or is heavily compute-limited, which amounts to the same thing.

And yes, why give people the fancy model for free? They can use some random model from OpenAI.

I asked the same question to regular Gemini and got a seemingly correct answer as well.

Just gonna leave this here