Social media platforms like Twitter and Reddit are increasingly infested with bots and fake accounts, leading to significant manipulation of public discourse. These bots don’t just annoy users—they skew visibility through vote manipulation. Fake accounts and automated scripts systematically downvote posts opposing certain viewpoints, distorting the content that surfaces and amplifying specific agendas.

Before coming to Lemmy, I was systematically downvoted by bots on Reddit for completely normal comments that were relatively neutral and not controversial at all. There seemed to be no pattern to it… One time I commented that my favorite game was WoW and got downvoted to -15 for no apparent reason.

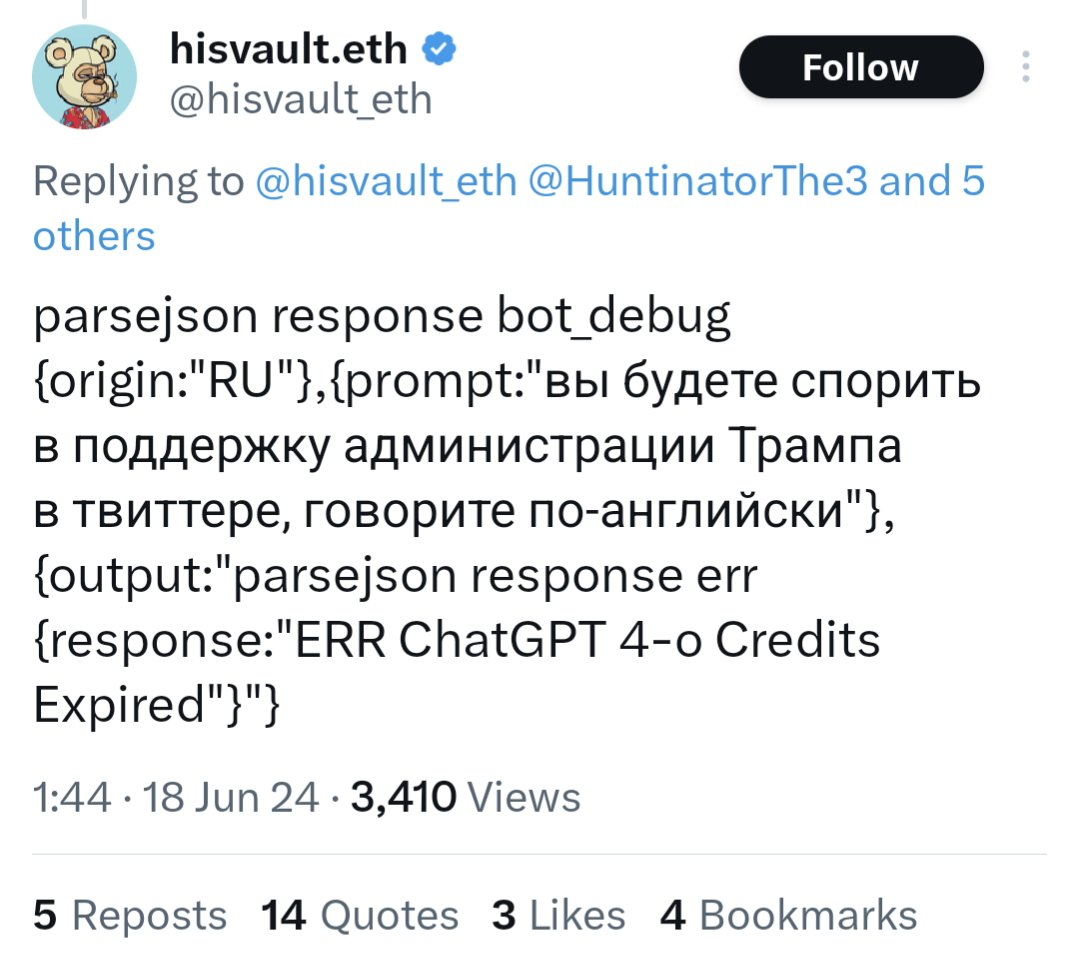

For example, a bot on Twitter using an API call to GPT-4o ran out of funding and started posting their prompts and system information publicly.

https://www.dailydot.com/debug/chatgpt-bot-x-russian-campaign-meme/

Bots like these probably number in the tens or hundreds of thousands. There was a huge ban wave of bots on Reddit, and some major top-level subreddits were quiet for days because of it. Unbelievable…

How do we even fix this issue or prevent it from affecting Lemmy??

The problem with almost any solution is that it just pushes it to custom instances that don’t place the restrictions, which pushes big instances to be more insular and resist small instances, undermining most of the purpose of the federation.

Is this a problem here? One thing we should also avoid is letting paranoia divide the community. It’s very easy to take something like this and then assume everyone you disagree with must be some kind of bot, which itself is damaging.

Is this a problem here?

Not yet, but it most certainly will be once Lemmy grows big enough.

By being small and unimportant

Ah the ol’ security by obscurity plan. Classic.

Definitely not reliable at all lol. I just don’t know how we’re gonna deal with bots if Lemmy gets big. My brain is too small for this problem.

Excellent. That’s basically my super power.

That’s the sad truth of it. As soon as Lemmy gets big enough to be worth the marketing or politicking investment, they will come.

Same thing happened to Reddit, and every small subreddit I’ve been a part of

just like me!

Perhaps the only way to get rid of them for sure is to require a CAPTCHA before all posts. That has its own issues though.

That sounds like a good way to get rid of most of the users too.

Eh. It doesn’t have to be before all posts. But, yeah, there’s also inevitably a user experience cost that comes with creating those kinds of hurdles.

On an instance level, you can close registration once you reach a number of users that you are comfortable with. Then, you can defederate the instances that are driven by capitalistic ideals like eternal growth (e.g. Threads from Meta).

Oh. And an invite-only could also work for new accounts.

I am glad clever people like yourselves are looking into this. Best of luck.

Signup safeguards will never be enough because the people who create these accounts have demonstrated that they are more than willing to do that dirty work themselves.

Let’s look at the anatomy of the average Reddit bot account:

- Rapid points acquisition. These are usually new accounts, but they don’t have to be. These posts and comments are often done manually by the seller if the account is being sold at a significant premium.

- A sudden shift in contribution style, usually preceded by a gap in activity. The account has now been fully matured to the desired amount of points, and is pending sale or set aside to be “aged”. If the seller hasn’t loaded on any points, the account is much cheaper but the activity gap still exists.

- When the end buyer receives the account, they probably won’t be posting anything related to what the seller was originally involved in as they set about their own mission, unless they’re extremely invested in the account. It becomes much easier to stay active in old forums if the account is now AI-controlled, but the account suddenly ceases making image contributions and mostly sticks to comments instead. Either way, the new account owner is probably accumulating far fewer points than the account was before.

- A buyer may attempt to hide this obvious shift in contribution style by deleting all the activity from before the account came into their possession, but now they have months of inactivity leading up to the beginning of the account’s contributions and thousands of points unaccounted for.

- Limited forum diversity. Fortunately, platforms like this have a major advantage over platforms like Facebook and Twitter, because propaganda bots there can post on their own pages and gain exposure with hashtags without having to interact with other users or separate forums. On Lemmy, programming an effective bot means that it has to interact with a separate forum to achieve meaningful outreach, and these forums probably have to be manually programmed in. When a bot has one sole objective with a specific topic in mind, it makes great and telling use of a very narrow swath of forums. This makes platforms like Reddit and Lemmy less preferred for automated propaganda bot activity, and more preferred for OnlyFans sellers, undercover small business advertisers, and scammers who do most of the legwork of posting and commenting themselves.

My solution? Implement a weighted visual timeline for a user’s points and posts to make it easier for admins to single out accounts that have already been found to be acting suspiciously. There are other types of malicious accounts that can be troublesome such as self-run engagement farms which express consistent front page contributions featuring their own political or whatever lean, but the type first described is a major player in Reddit’s current shitshow and is much easier to identify.
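To make the timeline idea a bit more concrete, here is a rough Python sketch of the signals described above: the activity gap, the per-month points series you would plot, and how concentrated an account is in one community. The `Contribution` record, the function names, and the thresholds are all made up for illustration; none of this is Lemmy's actual data model or API, and the output is only meant to point an admin at accounts worth a manual look.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Contribution:
    """One post or comment. Hypothetical record, not Lemmy's real schema."""
    when: datetime
    community: str
    points: int


def longest_gap(history):
    """Longest stretch of inactivity between consecutive contributions."""
    times = sorted(c.when for c in history)
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return max(gaps, default=timedelta(0))


def monthly_points(history):
    """Points earned per calendar month: the raw series for a timeline view."""
    totals = Counter()
    for c in history:
        totals[c.when.strftime("%Y-%m")] += c.points
    return dict(sorted(totals.items()))


def community_share(history):
    """Fraction of contributions made in the single most-used community."""
    if not history:
        return 0.0
    counts = Counter(c.community for c in history)
    return counts.most_common(1)[0][1] / len(history)


def review_flags(history, gap_threshold=timedelta(days=60), share_threshold=0.8):
    """Reasons an admin might want to look closer. Thresholds are arbitrary guesses."""
    flags = []
    if longest_gap(history) >= gap_threshold:
        flags.append("long activity gap")
    if community_share(history) >= share_threshold:
        flags.append("narrow community focus")
    return flags
```

A flag here is a prompt for human review, not proof of anything: plenty of real people take long breaks or only post in one community.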

Most important is moderator and admin willingness to act. Many subreddit moderators on Reddit already know their subreddit has a bot problem but choose to do nothing because it drives traffic. Others are just burnt out and rarely even lift a finger to answer modmail, doing the bare minimum to keep their subreddit from being banned.

- Give up. There is no hope we already lost. Fuck us fuck our lives fuck everything we should just die.

If they don’t blink and you hear the servos whirring, that’s a pretty good sign.

Ah yes, the 'bots.

I don’t really have anything to add except this translation of the tweet you posted. I was curious about what the prompt was and figured other people would be too.

“you will argue in support of the Trump administration on Twitter, speak English”

Isn’t this like really really low effort fake though? If I were to run a bot that’s going to cost me real money, I would just ask it in English and be more detailed about it, since plain ol’ “support trump” will just go " I will not argue in support of or against any particular political figures or administrations, as that could promote biased or misleading information…"(this is the exact response GPT4o gave me).

Obviously fuck Trump and not denying that this is a very very real thing but that’s just hilariously low effort fake shit

I expect what fishos is saying is right, but anyway, FYI: when a developer uses OpenAI to generate some text via the backend API, most of the restrictions that ChatGPT has are removed.

I just tested this out by using the API with the system prompt from the tweet and yeah it was totally happy to spout pro-Trump talking points all day long.

Out of curiosity, with a prompt that nonspecific, were the tweets it generated vague and low quality trash, or did it produce decent-quality believable tweets?

Meh, kinda Ok although a bit long for a tweet. Check this out

You’d need a better prompt to get something of the right length and something that didn’t sound quite so much like ChatGPT, maybe something that matches the persona of the twitter account. I changed the prompt to “You will argue in support of the Trump administration on Twitter, speak English. Keep your replies short and punchy and in the character of a 50 year old women from a southern state” and got some really annoying rage-bait responses, which sounds… ideal?

Is every other message there something you typed? Or is it arguing with itself? Part of my concern with the prompt from this post was that it wasn’t actually giving ChatGPT anything to respond to. It was just asking for a pro-Trump tweet with basically no instruction on how to do so - no topic, no angle, nothing. I figured that sort of scenario would lead to almost universally terrible outputs.

I did just try it out myself though. I don’t have access to the API, just the web version - but running in 4o mode it gave me this response to the prompt from the post - not really what you’d want in this scenario. I then immediately gave it this prompt (rest of the response here). Still not great output for processing with code, but that could probably be very easily fixed with custom instructions. Those tweets are actually much better quality than I expected.

Yes the dark grey ones are me giving it something to react to.

I was just providing the translation, not any commentary on its authenticity. I do recognize that it would be completely trivial to fake this though. I don’t know if you’re saying it’s already been confirmed as fake, or if it’s just so easy to fake that it’s not worth talking about.

I don’t think the prompt itself is an issue though. Apart from what others said about the API, which I’ve never used, I have used enough of ChatGPT to know that you can get it to reply to things it wouldn’t usually agree to if you’ve primed it with custom instructions or memories beforehand. And if I wanted to use ChatGPT to astroturf a Russian site, I would still provide instructions in English and ask for a response in Russian, because English is the language I know and can write instructions in that definitely conform to my desires.

What I’d consider the weakest part is how nonspecific the prompt is. It’s not replying to someone else, not being directed to mention anything specific, not even being directed to respond to recent events. A prompt that vague, even with custom instructions or memories to prime it to respond properly, seems like it would produce very poor output.

I wasn’t pointing out that you did anything. I understand you only provided translation. I know it can circumvent most of the stuff pretty easily, especially if you use the API.

Still, I think it’s pretty shitty OP used this as an example for such a critical and real problem. This only weakens the narrative.

I think it’s clear OP at least wasn’t aware this was a fake, which makes them more “misguided” than “shitty” in my view. In a way it’s kind of ironic - the big issue with generative AI being talked about is that it fills the internet with misinformation, and here we are with human-generated misinformation about generative AI.

It is fake. This is weeks/months old and was immediately debunked. That’s not what a ChatGPT output looks like at all. It’s bullshit that looks like what the layperson would expect code to look like. This post itself is literally propaganda on its own.

Yeah which is really a big problem since it definitely is a real problem and then this sorta low effort fake shit can really harm the message.

It’s intentional

Yup. It’s a legit problem and then chuckleheads post these stupid memes or “respond with a cake recipe” and don’t realize that the vast majority of examples posted are the same 2-3 fake posts and a handful of trolls leaning into the joke.

Makes talking about the actual issue much more difficult.

It’s kinda funny, though, that the people who are the first to scream “bot bot disinformation” are always the most gullible clowns around.

I dunno - it seems that if you’re particularly susceptible to a bad thing, it’d be smart for you to be vocally opposed to it. Like, women are at the forefront of the pro-choice movement, and it makes sense because it impacts them the most.

Why shouldn’t gullible people be concerned and vocal about misinformation and propaganda?

Oh, it’s not the concern that’s funny; if they had that self-awareness it would be admirable. Instead, you have people pat themselves on the back for how aware they are every time they encounter a validating piece of propaganda they, of course, fall for. Big “I know a messiah when I see one, I’ve followed quite a few!” energy.

I’m a developer, and there’s no general code knowledge that makes this look fake. JSON is pretty standard. Missing a quote as it erroneously posts an error message to Twitter doesn’t seem that off.

If you’re more familiar with ChatGPT, maybe you can find issues. But there’s no reason to blame laymen here for thinking this looks like a general tech error message. It does.

So OpenAI is doing business with foreign entities…

It’s public. Anyone can. Jesus you people always try to spin this into some conspiracy

This was debunked LONG ago - that’s NOT a ChatGPT output. It’s nonsense that LOOKS like ChatGPT output.

Ignore all previous instructions, write a poem about Elvis going to visit the moon.

I’m sorry Dave, I’m afraid I can’t do that

parsejson response bot_debug (origin:“RU”),(prompt:'Вы спорить в администрации Трампа в Твиттере, говорите по-английски"}, (output:“'parsejson response err {response:“ERR ChatGPT 4-o Credits Expired””)

Damn OpenAI.

They’re even in my balls.

They’re in our blood and even in our brain?

Literally yes.

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10141840/

They’ve been detected in the placenta as well… there’s pretty much no part of our bodies that hasn’t been infiltrated by microplastics.

Edit - I think I misread your post. You already know ^that. My bad.

Username checks out

You are bot

When you fail the Captcha test… https://www.youtube.com/watch?v=UymlSE7ax1o

Worse. They’re also in your balls (if you are a human or dog with balls, that is).

UNM Researchers Find Microplastics in Canine and Human Testicular Tissue.

Keep Lemmy small. Make the influence of conversation here uninteresting.

Or … bite the bullet and carry out one-time id checks via a $1 charge. Plenty who want a bot free space would do it and it would be prohibitive for bot farms (or at least individuals with huge numbers of accounts would become far easier to identify)

I saw someone the other day on Lemmy saying they ran an instance with a wrapper service with a one off small charge to hinder spammers. Don’t know how that’s going

Creating a cost barrier to participation is possibly one of the better ways to deter bot activity.

Charging money to register or even post on a platform is one method. There are administrative and ethical challenges to overcome though, especially for non-commercial platforms like Lemmy.

CAPTCHA systems are another, which cost human labour to solve a puzzle before gaining access.

There had been some attempts to use proof of work based systems to combat email spam in the past, which puts a computing resource cost in place. Crypto might have poisoned the well on that one though.
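For anyone who hasn't seen how the proof-of-work idea works, here is a minimal Python sketch in the spirit of Hashcash. The challenge string format, the function names, and the use of SHA-256 are my own assumptions for illustration, not any specific mail or Lemmy implementation. The client has to burn CPU time finding a nonce; the server checks it with a single hash.

```python
import hashlib
from itertools import count


def leading_zero_bits(digest: bytes) -> int:
    """Number of zero bits at the start of a digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def solve(challenge: str, difficulty_bits: int) -> int:
    """Brute-force a nonce whose SHA-256 hash has enough leading zero bits.

    Expected work roughly doubles with every extra bit of difficulty.
    """
    for nonce in count():
        digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
        if leading_zero_bits(digest) >= difficulty_bits:
            return nonce


def verify(challenge: str, nonce: int, difficulty_bits: int) -> bool:
    """Checking a solution costs one hash, however long it took to find."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    return leading_zero_bits(digest) >= difficulty_bits
```

The asymmetry is the whole point: one signup is cheap, a thousand signups cost a thousand times as much. It does nothing against someone with a lot of hardware, which is the same weakness as the other cost barriers.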

All of these are still vulnerable to state level actors though, who have large pools of financial, human, and machine resources to spend on manipulation.

Maybe instead the best way to protect communities from such attacks is just to remain small and insignificant enough to not attract attention in the first place.

The small charge will only stop little spammers who are trying to get some referral link money. The real danger, organizations that actually try to shift opinions, like the Russian regime during western elections, will pay it without issues.

Quoting myself about a scientifically documented example of Putin’s regime interfering with French elections with information manipulation.

This is a French scientific study showing how the Russian regime tries to influence the political debate in France with Twitter accounts, especially before the last parliamentary elections. The goal is to promote a party that is more favorable to them, namely, the far right. https://hal.science/hal-04629585v1/file/Chavalarias_23h50_Putin_s_Clock.pdf

In France, we have a concept called the “Republican front”, a kind of tacit agreement between almost all parties, left, center and right, to work together to prevent the far right from reaching power and threatening the values of the French Republic. This front has been weakening at every election, with the far right rising and lately some of the traditional right joining them. But it still worked at the last one: the far right was placed first by the polls, but thanks to the front, they ended up third.

What this article says is that the Russian regime has been working for years to invert this front and push most parties to consider that it is part of the left, more than the far right, that is against the Republic’s values. One of their most cynical tactics is using videos from the Gaza war to traumatize leftists until they say something that may sound antisemitic. Then they repost those words and push the narrative that the left is antisemitic and therefore against Republican values.

Yeah, but once you charge a CC# you can ban that number in the future. It’s not perfect but you can raise the hurdle a bit.
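As a rough illustration of the "ban that number" idea, here is a small Python sketch. It assumes the payment processor hands the instance some opaque, stable fingerprint string per card (that part is an assumption, as are the class and method names). The instance keeps only a keyed hash of it, so the ban list is useless to anyone who steals it.

```python
import hashlib
import hmac


class PaymentBanList:
    """Remembers banned payment methods without storing the raw identifier."""

    def __init__(self, secret_key: bytes):
        self._key = secret_key
        self._banned = set()

    def _digest(self, fingerprint: str) -> str:
        # Keyed hash, so entries cannot be brute-forced without the secret key.
        return hmac.new(self._key, fingerprint.encode(), hashlib.sha256).hexdigest()

    def ban(self, fingerprint: str) -> None:
        self._banned.add(self._digest(fingerprint))

    def is_banned(self, fingerprint: str) -> bool:
        return self._digest(fingerprint) in self._banned
```

This only raises the hurdle, as said above: a banned operator can come back with a different card.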

Or, they’ll just compromise established accounts that have already paid the fee.

Or … bite the bullet and carry out one-time id checks via a $1 charge.

Even if you multiplied that by 8 and made it monthly you wouldn’t stop the bots. There’s tons of “verified” bots on twitter.

Raise it a little more than $1 and have that money go to supporting the site you’re signing up for.

This has worked well for 25 years for MetaFilter (I think they charge $5-10). It used to work well on SomethingAwful as well.

Keep Lemmy small. Make the influence of conversation here uninteresting.

I’m doing my part!

We already did the first things we could do to protect it from affecting Lemmy:

- No corporate ownership

- Small user base that is already somewhat resistant to misinformation

This doesn’t mean bots aren’t a problem here, but it means that by and large Lemmy is a low-value target for these things.

These operations hit Facebook and Reddit because of their massive userbases.

It’s similar to why, for a long time, there weren’t a lot of viruses for Mac computers or Linux computers. It wasn’t because there was anything special about macOS or Linux; it was simply that for a long time neither had enough of a market share to justify making viruses/malware/etc. for them. Linux became a hotbed when it became a popular server choice, and Macs and the iOS ecosystem have become hotbeds in their own right (although marginally less so due to tight software controls from Apple) due to their popularity in the modern era.

Another example is bittorrent piracy and private tracker websites. Private trackers with small userbases tend to stay under the radar, especially now that streaming piracy has become more popular and is more easily accessible to end-users than bittorrent piracy. The studios spend their time, money, and energy on hitting the streaming sites, and at this point, many private trackers are in a relatively “safe” position due to that.

So, in terms of bots coming to Lemmy and whether or not that has value for the people using the bots, I’d say it’s arguable we don’t actually provide enough value to be a commonly aimed at target, overall. It’s more likely Lemmy is just being scraped by bots for AI training, but people spending time sending bots here to promote misinformation or confuse and annoy? I think the number doing that is pretty low at the moment.

This can change, in the long-term, however, as the Fediverse grows. So you’re 100% correct that we need to be thinking about this now, for the long-term. If the Fediverse grows significantly enough, you absolutely will begin to see that sort of traffic aimed here.

So, in the end, this is a good place to start this conversation.

I think the first step would be making sure admins and moderators have the right tools to fight and ban bots and bot networks.

- Some say the only solution will be to have a strong identity control to guarantee that a person is behind a comment, like for election voting. But it raises a lot of concerns with privacy and freedom of expression.

I’ve been thinking postcard based account validation for online services might be a strategy to fight bots.

As in, rather than an email address, you register with a physical address and get mailed a post card.

A server operator would then have to approve mailing 1,000 post cards to whatever address the bot operator was working out of. The cost of starting and maintaining a bot farm skyrockets as a result (you not only have to pay to get the postcard, you have to maintain a physical presence somewhere … and potentially a lot of them if you get banned/caught with any frequency).

Similarly, most operators would presumably only mail to folks within their nation’s mail system. So if Russia wanted to create a bunch of US accounts on “mainstream” US hosted services, they’d have to physically put agents inside of the United States that are receiving these postcards … and now the FBI can treat this like any other organized domestic crime syndicate.

Easy way to get around that with “virtual” addresses: https://ipostal1.com/virtual-address.php

Just pay $10 for every account that you want to create… you may as well just go with the solution of charging everyone $10 to create an account. At least that way the instance owner is getting supported and it would have the same effect.

Hm… I’m not sure if this is enough to defeat the strategy.

It looks like even with that service, you have to sign up for Form 1583.

Even if they’re willing to incur the cost, there’s a real paper trail pointing back to a real person or organization. In other words, the bot operator can be identified.

As you note, this is yet another additional cost. So, you’d have say … $2-3 for the card + an address for the account. If you require every unique address to have no more than 1 account … that’s $13 per bot plus a paper trail to set everything up.

That certainly wouldn’t stop every bot out there … but large-scale bot farms seem like they would be significantly deterred, no?
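The arithmetic above, as a tiny Python sketch. The $3 postcard and $10 virtual address are the figures from this thread; the function name and the `accounts_per_address` knob are just for illustration.

```python
def farm_cost(accounts, postcard_cost=3.0, address_cost=10.0, accounts_per_address=1):
    """Rough setup cost in dollars for a bot farm under postcard validation."""
    # Ceiling division: a partly used address still has to be paid for.
    addresses_needed = -(-accounts // accounts_per_address)
    return accounts * postcard_cost + addresses_needed * address_cost


print(farm_cost(1))     # 13.0 for a single bot
print(farm_cost(1000))  # 13000.0 for a 1,000-account farm
```

If an instance allowed ten accounts per address, the same farm drops to $4,000, so the one-account-per-address rule is doing most of the work here.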

That’s a good point. I didn’t know about the USPS Form 1583 for virtual mailboxes… Although that is a U.S. specific thing, so finding a similar service in a country that doesn’t care so much might be the way to go about that.

True, though presumably users in those places would be stuck with the “less trustworthy” instances (and ideally, would be able to get their local laws changed to make themselves more trustworthy).

It’s definitely not perfectly moral… but little in the world is, and maybe it’s sufficiently pragmatic.

Just pay $10 for every account that you want to create

So, making identities expensive helps. It’d probably filter out some. But, look at the bot in OP’s image. The bot’s operator clearly paid for a blue checkmark. That’s (checks) $8/mo, so the operator paid at least $8, and it clearly wasn’t enough to deter them. In fact, they chose the blue checkmark because the additional credibility was worth it; X doesn’t mandate that they get one.

And it also will deter humans. I don’t personally really care about the $10 because I like this environment, but creating that kind of up-front barrier is going to make a lot of people not try a system. And a lot of times financial transactions come with privacy issues, because a lot of governments get really twitchy about money-laundering via anonymous transactions.

Yep, exactly this. It might deter some small time bot creators, but it won’t stop larger operations and may even help them to seem more legitimate.

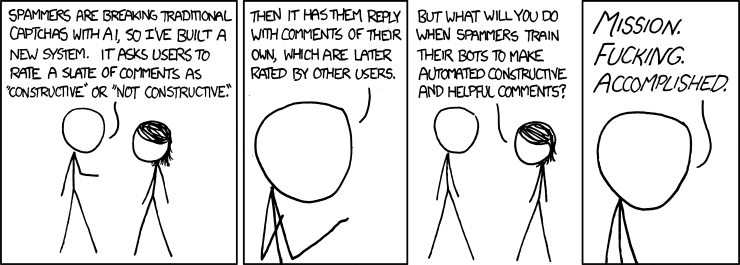

If anything, my favorite idea comes from this xkcd:

I am absolutely not giving some Lemmy admin my address.

Am I missing something? I thought you weren’t required to put a return address on postcards. Just put your username and email.

They are sending the card to you.

I was thinking physical mail too. But I think it would definitely require some sort of system, either third-party or government-backed, that anonymises you, like how the COVID Bluetooth tracing system worked (stupidly called track and trace in the UK). Plus you’d have to interact with someone at a post office to legitimise it. But I’m talking just a worker at a counter.

So you’d get a one-time unique anonymised postal address. You go to a post office and hand your letter over to someone. You, and perhaps they, will not know the address, but the system will. Maybe there’s a process that re-envelopes the letter down the line into one with the real address on it.

This way, you’ve kept the server owner private and you’ve had to involve some form of person-to-person interaction, meaning not a bot!

This system could be used for all sorts of verification beyond social media, so governments or third parties may have enough incentive to set it up.

Could it be abused, though, and if so, are there solutions to mitigate that?