Yeah. Normal Whoppers are crunchy. 1 in 4 Whoppers is soggy and chewy and hard to eat.

Kogasa

- 0 Posts

- 120 Comments

Whoppers are good but the risk of getting a bad one is not worth it. Ech

It’s called speed of lobsters

0·3 months ago

Don’t think it saves bandwidth unless it’s a DNS-level block, which IT should also do, but separately from uBO.

0·3 months ago

Eddie Bauer and Carhartt are my go-tos. Both carry tons of tall sizes. Wrangler has some too and may be cheaper.

0·4 months ago

I worked with Progress via an ERP that had been untouched and unsupported for almost 20 years. Damn easy to break stuff, more footguns than SQL somehow.

0·4 months ago

This has nothing to do with Windows or Linux. CrowdStrike has in fact broken Linux installs in a fairly similar way before.

0·4 months ago

Sure, throw people in jail who haven’t committed a crime, that’ll fix all kinds of systemic issues.

0·4 months ago

Catch and then what? Return to what?

0·4 months ago

Still not enough, or at least pi is not known to have this property. You need the number to be “normal” (or to have a slightly weaker property), which turns out to be hard to prove about most numbers.
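A quick sketch of why an infinite, non-repeating expansion alone isn't enough, assuming that's the property being discussed upthread. The number 0.1010010001... (runs of zeros that keep growing) never repeats, so it's irrational, yet it only ever uses the digits 0 and 1. The function name `sparse_ones_digits` is made up for illustration.

```python
from itertools import count, islice

def sparse_ones_digits():
    """Yield the decimal digits of 0.1010010001..., one at a time.

    Each 1 is followed by a run of zeros that is one longer than the
    previous run, so the expansion never becomes periodic.
    """
    for gap in count(1):
        yield 1
        for _ in range(gap):
            yield 0

# Take a long prefix of the expansion and inspect it.
digits = list(islice(sparse_ones_digits(), 10_000))
prefix = "".join(map(str, digits))

print(prefix[:20])          # first 20 digits after the decimal point
print(sorted(set(digits)))  # the only digits that ever show up
print("2" in prefix)        # the single-digit string "2" never appears
```

So a number like this can't contain every finite digit string. Whether pi does is exactly the open part.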

0·4 months ago

It sounds like you don’t understand the complexity of the game. Despite being finite, the number of possible games is extremely large.

These things are specifically not defined by the protocol. They could be. They’re not, by design.

It doesn’t, it just delegates the responsibility to something else, namely xdg-desktop-portal and/or your compositor. The main issue with global hotkeys is that applications can’t usually set them, e.g. Discord push-to-talk, rather the compositor has to set them and the application needs to communicate with the compositor. This is fundamentally different from how it worked with X11 so naturally adoption is slow.

Bitcoin is more widely seen as a vehicle for speculation rather than a decentralized currency. Unlucky.

0·4 months ago

There’s a spectrum between architecture/planning/design and “premature optimization.” Using microservices in anticipation of a need for scaling isn’t premature optimization, it’s just design.

0·5 months ago

U good?

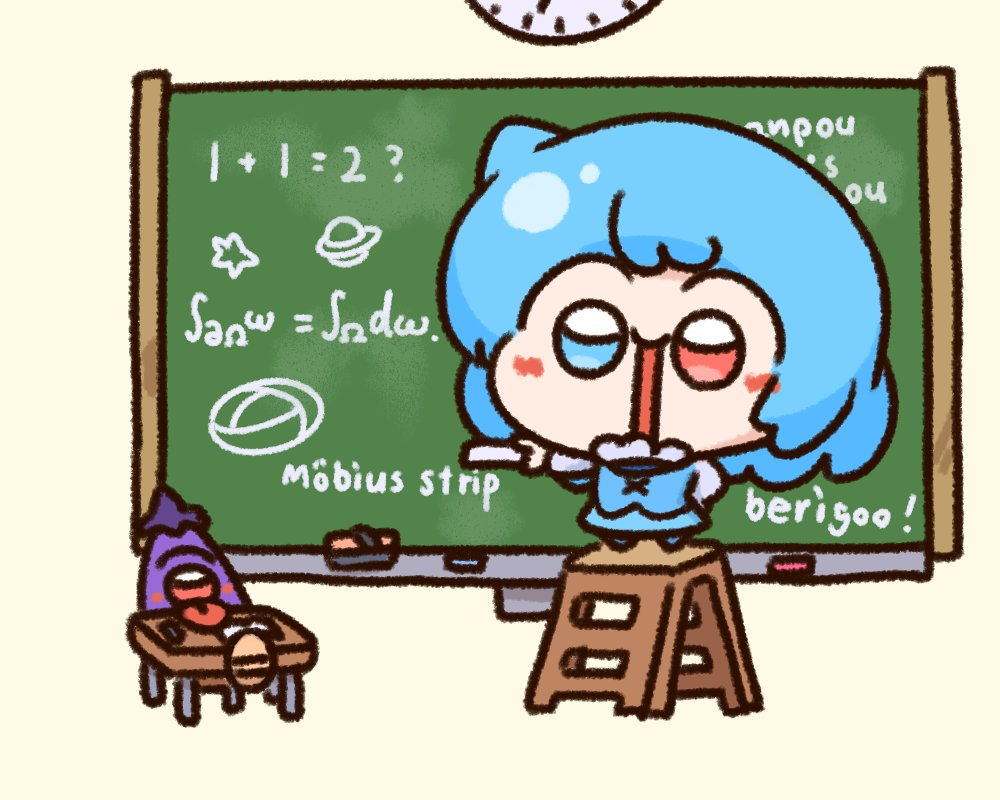

Stokes’ theorem. Almost the same thing as the high school one. It generalizes the fundamental theorem of calculus to oriented smooth manifolds with boundary. In the case that M is the interval [a, x] and ω is the 0-form (i.e. the function) f on M, one has dω = f’(t)dt and ∂M is the oriented pair of points {+x, -a}. Integrating f over a finite set of oriented points is the same as evaluating at each point and summing, with negatively-oriented points getting a negative sign. Then Stokes’ theorem as written says that f(x) - f(a) = integral from a to x of f’(t) dt.
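Written out as a short derivation, assuming the pictured statement is the usual form of Stokes’ theorem and taking ω to be the 0-form f (the function itself), so that dω = f’(t)dt:

```latex
\begin{align*}
\int_M d\omega &= \int_{\partial M} \omega \\
\int_{[a,x]} f'(t)\,dt &= \int_{\{+x,\,-a\}} f \\
\int_a^x f'(t)\,dt &= f(x) - f(a)
\end{align*}
```

The last line is just the fundamental theorem of calculus, with the minus sign on f(a) coming from the orientation of the endpoint a.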

0·5 months ago

Your first two paragraphs seem to rail against a philosophical conclusion made by the authors by virtue of carrying out the Turing test. Something like “this is evidence of machine consciousness” for example. I don’t really get the impression that any such claim was made, or that more education in epistemology would have changed anything.

In a world where GPT-4 exists, the question of whether one person can be fooled by one chatbot in one conversation is long since uninteresting. The question of whether specific models can achieve statistically significant success is maybe a bit more compelling, not because it’s some kind of breakthrough but because it makes a generalized claim.

Re: your edit, Turing explicitly puts forth the imitation game scenario as a practicable proxy for the question of machine intelligence, “can machines think?”. He directly argues that this scenario is indeed a reasonable proxy for that question. His argument, as he admits, is not a strongly held conviction or rigorous argument, but “recitations tending to produce belief,” insofar as they are hard to rebut, or their rebuttals tend to be flawed. The whole paper was to poke at the apparent differences between (a futuristic) machine intelligence and human intelligence. In this way, the Turing test is indeed a measure of intelligence. That’s not to say that a machine passing the test is somehow in possession of a human-like mind or has reached a significant milestone of intelligence.

0·5 months ago

I don’t think the methodology is the issue with this one. 500 people can absolutely be a legitimate sample size. Under basic assumptions about the sample being representative and the effect size being sufficiently large, you do not need more than a couple hundred participants to make statistically significant observations. 54% being close to 50% doesn’t mean the result is inconclusive. With an ideal sample, it means people couldn’t reliably differentiate the human from the bot, which is presumably what the researchers believed is of interest.
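A rough back-of-the-envelope check, not the study's actual analysis. It assumes the 54% is 270 out of 500 independent yes/no judgments (the real design may differ) and uses the plain normal-approximation (Wald) interval. The helper name `wald_interval` is just for illustration.

```python
import math

def wald_interval(successes: int, trials: int, z: float = 1.96):
    """Approximate 95% confidence interval for a proportion (normal approximation)."""
    p_hat = successes / trials
    # Standard error of a sample proportion.
    se = math.sqrt(p_hat * (1 - p_hat) / trials)
    return p_hat - z * se, p_hat + z * se

# Assumed numbers: 54% of 500 judgments, i.e. 270 out of 500.
low, high = wald_interval(270, 500)
print(f"{low:.3f} to {high:.3f}")
```

Under those assumptions the interval comes out to roughly 0.496 to 0.584, which is only about four points wide on either side and still contains 0.5. That's a fairly tight estimate sitting right around chance, which is a result in itself rather than a sign the sample was too small.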

Paru was at one point a rewrite of yay in Rust, and has since continued development as a pseudo-parallel fork. It’s good. Dunno if it’s worth switching, you’d have to see if there are any specific features you might happen to want, but they’re both fine.