- cross-posted to:

- technology@beehaw.org

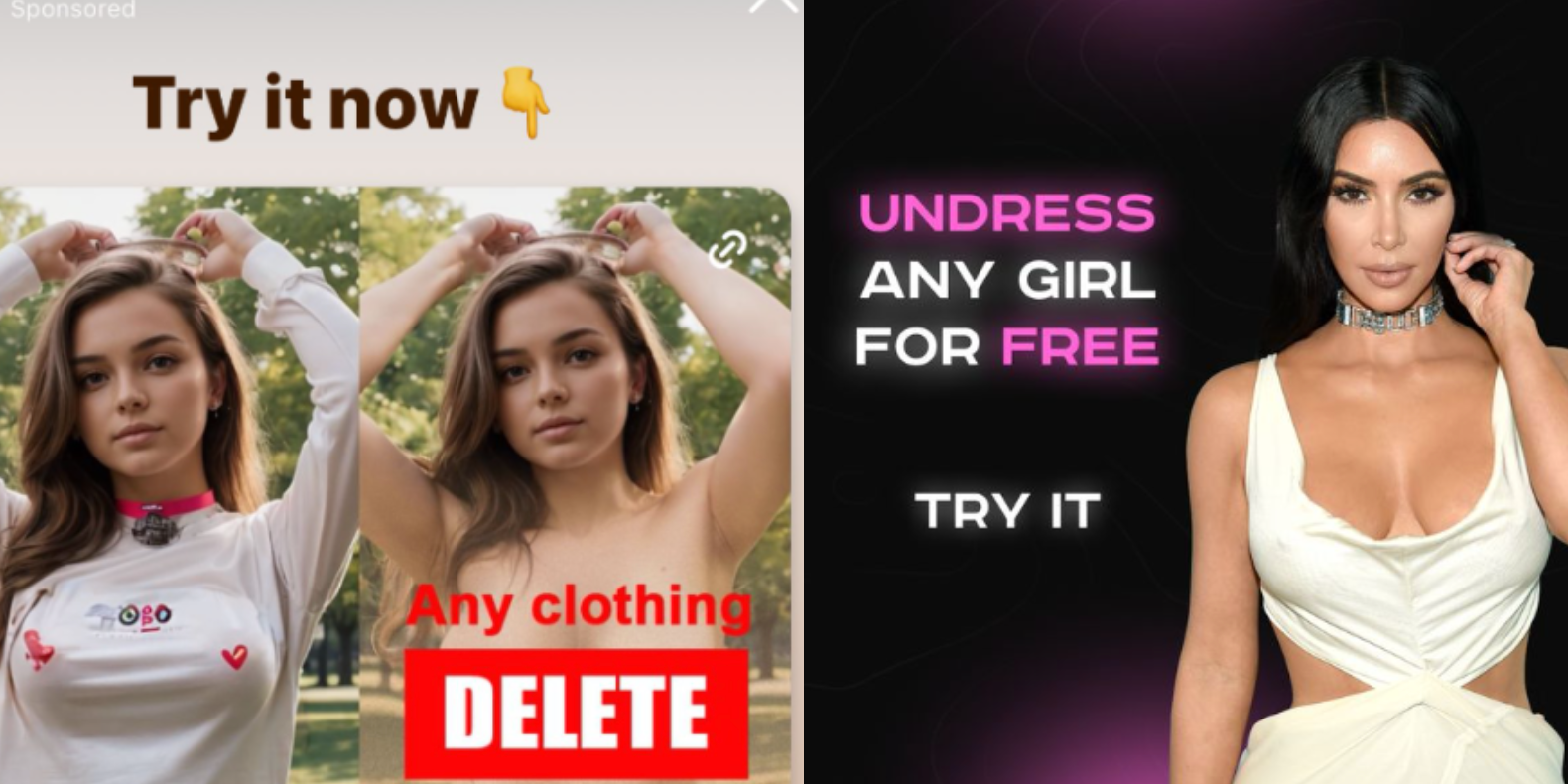

Instagram is profiting from several ads that invite people to create nonconsensual nude images with AI image generation apps, once again showing that some of the most harmful applications of AI tools are not hidden in the dark corners of the internet, but are actively promoted to users by social media companies unable or unwilling to enforce their policies about who can buy ads on their platforms.

While parent company Meta’s Ad Library, which archives ads on its platforms, who paid for them, and where and when they were posted, shows that the company has previously taken down several of these ads, many ads that explicitly invited users to create nudes, along with some of the ad buyers, were still active until I reached out to Meta for comment. Some of these ads were for the best known nonconsensual “undress” or “nudify” services on the internet.

It’s creepy and can lead to obsession, which can lead to actual harm for the individual.

I don’t think it should be illegal, but it is creepy and you shouldn’t do it. Also, sharing those AI images/videos could be illegal, depending on how they’re represented (e.g. it could constitute libel or fraud).

I disagree. I think it should be illegal (and stay that way in countries where it’s already illegal), for several reasons. For example, you should have control over what happens with your images. Also, it feels violating to become, unwillingly and unasked, part of someone else’s sexual act.

That sounds problematic though. If someone takes a picture and you’re in it, how do they get your consent to distribute that picture? Or are they obligated to cut out everyone but those who consent? What does that mean for news orgs?

That seems unnecessarily restrictive on the individual.

At least in the US (and probably lots of other places), any pictures taken where there isn’t a reasonable expectation of privacy (e.g. in public) are subject to fair use. This generally means I can use it for personal use pretty much unrestricted, and I can use it publicly in a limited capacity (e.g. with proper attribution and not misrepresented).

Yes, it’s creepy and you’re justified in feeling violated if you find out about it, but that doesn’t mean it should be illegal unless you’re actually harmed. And that barrier is pretty high to protect people’s rights to fair use. Without fair use, life would suck a lot more than someone doing creepy things in their own home with pictures of you.

So yeah, don’t do creepy things with pictures of other people, that’s just common courtesy. But I don’t think it should be illegal, because the implications of the laws needed to get there are worse than the creepy behavior of a small minority of people.

Can you provide an example of when a photo has been taken that breaches the expectation of privacy that has been published under fair use? The only reason I could think that would work is if it’s in the public interest, which would never really apply to AI/deepfake nudes of unsuspecting victims.

I’m not really sure how to answer that. Fair use is a legal term that limits the “expectation of privacy” (among other things), so by definition, if a court finds it to be fair use, it has also found that it’s not a breach of the reasonable expectation of privacy legal standard. At least that’s my understanding of the law.

So my best effort here is tabloids. They don’t serve the public interest (they serve the interested public), and they violate what I consider a reasonable expectation of privacy standard, with my subjective interpretation of fair use. But I disagree with the courts quite a bit, so I’m not a reliable standard to go by, apparently.

Fair use laws relate to intellectual property, privacy laws relate to an expectation of privacy.

I’m asking when has fair use successfully defended a breach of privacy.

Tabloids sometimes do breach privacy laws, and they get fined for it.

Right, they’re orthogonal concepts. If something is protected by fair use laws, then privacy laws don’t apply. If privacy laws apply, then it’s not fair use.

The proper discussion in this area is around libel law. That’s where tabloids are usually sued, not for fair use or “privacy violations.” For a libel suit to succeed, the plaintiff must prove that the defendant made false statements that caused actual harm to the plaintiff’s reputation. There are a bunch of lawsuits going on right now examining deep fakes and similar allegedly libelous uses of an individual’s likeness. For a specific example, look at the Taylor Swift lawsuit around deep fake porn.

But the crux of the matter is that you don’t have a right to your likeness, generally speaking, and fair use laws protect creepy use of legally acquired representations of your likeness.

The deepfake stuff is interesting from a legal standpoint, and that is essentially the topic of this thread. When deepfakes first became a thing, many companies (like Reddit) chose to ban the content; they did so voluntarily, perhaps out of a mix of moral and liability concerns.

What I referred to regarding tabloids is that there have been many cases of paparazzi being fined for breaches of privacy, not libel. From my studies I recall a good example being “if you need a ladder to see over someone’s fence you are invading the expectation of privacy”. This was before drones were a thing so I don’t know how it applies these days.

I agree that you should have control of your likeness, but I don’t think it is as protected as your comment suggests.