Here is the official statement from OpenSUSE: https://news.opensuse.org/2024/03/29/xz-backdoor/

Does this only affect SSH, or are other network-facing services that use liblzma affected too?

The malicious code attempts to hook into libcrypto, so potentially other services that use libcrypto could be affected too. I don’t think extensive research has been done on this yet.

SSH doesn’t even use liblzma. It’s pulling in the malicious code via libsystemd, which does use liblzma.

Edit: “crypto” meaning cryptography of course, not cryptocurrency.

We know that sshd is targeted but we don’t know the full extent of the attack yet.

Also, even aside from the attack code here having unknown implications, the attacker made extensive commits to liblzma over quite a period of time, and added a lot of binary test files to the xz repo that were similar to the one that hid the exploit code here. He was also signing releases for some time prior to this, and could have released a signed tarball that differed from the git repository, as he did here. The 5.6.0 and 5.6.1 releases contained this backdoor aimed at sshd, but it’s not impossible that he added vulnerabilities prior to this. Xz is used during the Debian packaging process, so code he could change is active at some fairly sensitive points on a lot of systems.

It is entirely possible that this is the first vulnerability that the attacker added, and that all the prior work was to build trust. But…it’s not impossible that there were prior attacks.

I will laugh out loud if the “fixed” binary contains a second backdoor, but one of better quality. It’s reminiscent of a poorly hidden small joint that is, naturally, found, after which the bargaining, apologizing and making amends begin. Although now it’s not at all clear which version of the code is more trustworthy.

If you’re using `xz` version 5.6.0 or 5.6.1, please upgrade asap, especially if you’re using a rolling-release distro like Arch or its derivatives. Arch has rolled out the patched version a few hours ago.

Backdoor only gets inserted when building RPM or DEB. So while updating frequently is a good idea, it won’t change anything for Arch users today.

I think that was a precaution. The malicious build script ran during the build, but the backdoor itself was most likely not included in the resulting package as it checked for specific packaging systems.

No, read the link you posted:

Arch does not directly link openssh to liblzma, and thus this attack vector is not possible. You can confirm this by issuing the following command:

ldd "$(command -v sshd)"

However, out of an abundance of caution, we advise users to remove the malicious code from their system by upgrading either way.
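For anyone who wants to script that check, here is a minimal sketch. It assumes `ldd` prints its usual `name => path` lines; the function name `links_liblzma` is made up for illustration. It reads `ldd` output on stdin, so it can be tried on saved output as well as on a live system.

```shell
# Hypothetical helper (not part of any tool): exits 0 if the ldd output
# on stdin mentions liblzma, exits 1 otherwise.
links_liblzma() {
    grep -q 'liblzma\.so'
}

# Example on a live system (not run here):
#   ldd "$(command -v sshd)" | links_liblzma && echo "sshd links liblzma"
```

Since `ldd` also lists indirect dependencies, a liblzma pulled in through libsystemd should show up as well. A negative result only speaks to this one attack vector, which is why the advisory still says to upgrade.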

when building RPM or DEB.

Which ones? Everything I run seems to be clear.

https://access.redhat.com/security/cve/CVE-2024-3094

| Products / Services | Components | State |
| --- | --- | --- |
| Enterprise Linux 6 | xz | Not affected |
| Enterprise Linux 7 | xz | Not affected |
| Enterprise Linux 8 | xz | Not affected |
| Enterprise Linux 9 | xz | Not affected |

(and thus all the bug-for-bug clones)

Fedora 41, Fedora Rawhide, Debian Sid are the currently known affected ones AFAIK.

Those are the ones getting the most recent software versions, so nothing that should be running on a server.

Dang, Arch never sleeps, does it? That’s a 24/7 incident response squad level of support.

Gentoo just reverted to the last tarball signed by an author other than the one who seems responsible for the backdoor. That person has been on the project for years, so one should keep up to date and possibly revert even further back than just before 5.6.*. Gentoo just reverted to 5.4.2.

Just updated on void and saw the same thing

Since the actual operation of the liblzma SSH backdoor payload is still unknown, there’s a protocol for securing your impacted systems:

• Consider all data, including key material and secrets on the impacted system as compromised. Expand the impact to other systems, as needed (for example: if a local SSH key is used to access a remote system then the remote system must be considered impacted as well, within the scope the key provides).

• Wipe the impacted host and reinstall it from scratch. Use a known-good install that does not contain the malicious payload. Generate new keys and passwords. Do not reuse any from the impacted systems.

• Restore configuration and data from backups, but from before the time the malicious liblzma package was installed. However, be careful not to allow potentially leaked credentials or keys to have access to the newly installed system (for example via $HOME/.ssh/authorized_keys).

This handles the systems themselves. Unfortunately any passwords and other credentials stored, accessed or processed with the impacted systems must be considered compromised as well. Change passwords on web sites and other services as needed. Consider the fact that the attacker may have accessed the services and added ways to restore access via for example email address or phone number in their control. Check all information stored on the services for correctness.

This is a lot of work, certainly much more than just upgrading the liblzma package. This is the price you have to pay to stay safe. Just upgrading your liblzma package and hoping for the best is always an option, too. It’s up to you to decide if this is a risk worth taking.

This recovery protocol might change somewhat once the actual operation of the payload is figured out. There might be situations where the impact could be more limited.

As an example: if it turns out that the payload is fully contained and only allows unauthorized remote access via the tampered sshd, and the host is not directly accessible from the internet (the SSH port is not open to the internet), it might be possible to clean up the system locally without a full reinstall.

However, do note that the information stored on the system might still have been leaked to the outside world. For example, leaked ssh keys without a passphrase could still afford the attacker access to remote systems.

This is a long con, and honestly the only people at fault are the bad actors themselves. Assuming Jia Tan’s GitHub identity and pgp key weren’t compromised by someone else, this backdoor appears to be the culmination of three years of work.

Could a Flatpak contain one of the backdoored builds of `xz` or `liblzma`? Is there a way to check? Would such a thing be exploitable, or does this backdoor only affect ssh servers?

The base runtime pretty much every Flatpak uses includes xz/liblzma, but none of the affected versions are included. You can poke around in a base runtime shell with `flatpak run --command=sh org.freedesktop.Platform//23.08` or similar, and check your installed runtimes with `flatpak list --runtime`.

23.08 is the current latest version used by most apps on Flathub and includes xz 5.4.6. 22.08 is an older version you might also still have installed and includes xz 5.2.12. They’re both pre-backdoor.

It seems there’s an issue open on the freedesktop-sdk repo to revert xz to an even earlier version predating the backdoorer’s significant involvement in xz, which some other distros are also doing out of an abundance of caution.

So, as far as we know: nothing uses the backdoored version, even if it did use that version it wouldn’t be compiled in (since org.freedesktop.Platform isn’t built using Deb or RPM packaging and that’s one of the conditions), even if it was compiled in it would to our current knowledge only affect sshd, the runtime doesn’t include an sshd at all, and they’re still being extra cautious anyway.

One caveat: There is an unstable version of the runtime that does have the backdoored version, but that’s not used anywhere (I don’t believe it’s allowed on Flathub since it entirely defeats the point of it).

ELI5 what does this mean for the average Linux user? I run a few Ubuntu 22.04 systems (yeah yeah, I know, canonical schmanonical) - but they aren’t bleeding edge, so they shouldn’t exhibit this vulnerability, right?

apt info xz-utils

Your version is old as balls. Even if you were on Mantic, it would still be old as balls.

Security through antiquity

The average user? Nothing. Mostly it just affects those who get the newest versions of everything.

In this case I think that’s just Fedora and Debian Sid users or so.

The backdoor only activates during DEB or RPM builds, and was quickly discovered so only rolling release distros using either package format were affected.
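If you want to check a version string against the two releases named in this thread, a rough sketch follows. The function name `xz_version_status` and its output labels are made up for illustration. It only compares the upstream version number, so a match means "go read your distro's advisory", not "you are backdoored": as noted above, the payload only gets built in under certain packaging conditions, and a distro may rebuild the same upstream number from clean sources.

```shell
# Hypothetical helper: classify an xz version string.
# Prints "known-bad" for upstream 5.6.0 and 5.6.1, the two releases named
# in this thread, and "not-known-bad" for anything else.
# A packaging suffix such as the "-1" in "5.6.0-1" is ignored.
xz_version_status() {
    case "${1%%-*}" in
        5.6.0|5.6.1) echo "known-bad" ;;
        *)           echo "not-known-bad" ;;
    esac
}

# Example (not run here): feed it whatever version your package manager reports.
#   xz_version_status 5.6.0-1
```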

And you know what? Doing updates once a week saved me from updating to this version :)

I upgraded to 5.6.0-1 on the 28th of February already. Over a month ago. On a server. That’s the first time Arch testing has fucked me so hard lol.

Running Arch has benefits because of more up-to-date packages, but ofc, as happened to you, it introduces more risks.

You probably are fairly safe. Yeah, okay, from a purely-technical standpoint, your server was wide-open to the Internet. But unless some third party managed to identify and leverage the backdoor in the window between you deploying it and it being fixed, only the (probably state-backed) group who are doing this would have been able to make use of it. They probably aren’t going to risk exposing their backdoor by exploiting it on your system unless they believe that you have something that would be really valuable to them.

Maybe if you’re a critical open-source developer, grabbing your signing keys or other credentials might be useful, given that they seem to be focused on supply-chain attacks, but for most people, they probably just aren’t worth the risk. It only takes them hitting some system with an intrusion-detection system that picks up on the break-in, them leaving behind traces, and some determined person tracking down what happened, and they’ve destroyed their exploit.

Damn, it is actually scary that they managed to pull this off. The backdoor came from the second-largest contributor to xz too, not some random drive-by.

They’ve been contributing to xz for two years, and committed various “test” binary files.

It’s looking more like a long game to compromise an upstream.

Either that or the attacker was very good at choosing their puppet…

Well the account is focused on one particular project which makes sense if you expect to get burned at some point and don’t want all your other exploits to be detected. It looks like there was a second sock puppet account involved in the original attack vector support code.

We should certainly audit other projects for similar changes from other pseudonymous accounts.

Yeah, and the 700 commits should be reverted… just in case we missed something.

That should be standard procedure.

Time to audit all their contributions, although it looks like they mostly contribute to xz. I guess we’ll have to wait for comments from the rest of the team, or to hear whether the whole org needs to be considered compromised.

It would be nice if we could press formal charges

there will be federal investigation

Do you have a source for this?

I don’t have a source, but I think it is safe to say, given the large corporations and government institutions that rely on XZ Utils. I’m sure Microsoft, Amazon, Red Hat, etc. are in talks with the federal government about this.

Source: they made it up

updated my post, it was just some speculation i misread

Assuming that it’s just that person, that it’s their actual name and that they’re in the US…

This is the best post I’ve read about it so far: https://boehs.org/node/everything-i-know-about-the-xz-backdoor

In the fallout, we learn a little bit about mental health in open source.

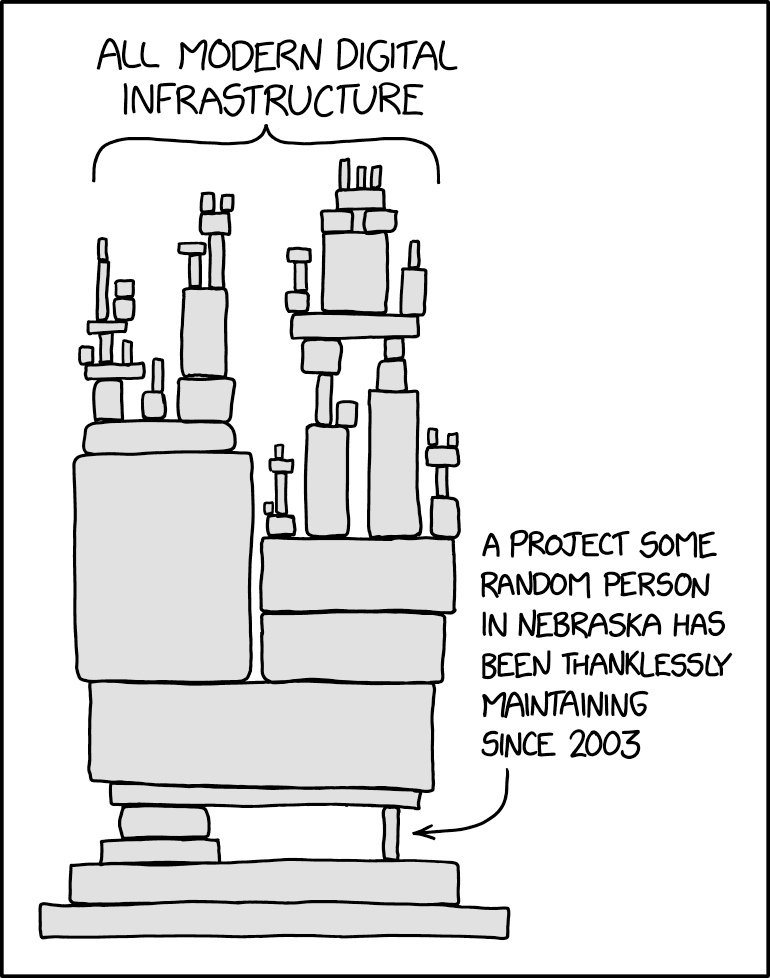

Reminded me of this, relevant as always, xkcd:

Yes, exactly.

And looking at you, npm.

That whole timeline is insane, and the fact that anyone even found this in the totally coincidental way they did is very lucky for the rest of us.

Holy shit

This is a fun one we’re gonna be hearing about for a while…

It’s fortunate it was discovered before any major releases of non-rolling-release distros were cut, but damn.

That’s the scary thing. It looks like this narrowly missed getting into Debian and RH. Downstream of those is… everything.

This is pretty insane. Can’t wait for the Darknet Diaries on this one.


Time to bring back the reproducible build hype

Won’t help here; this backdoor is entirely reproducible. That’s one of the scary parts.

The back door is not in the source code though, so it’s not reproducible from source.

Part of the payload was in the tarball. There was still a malicious shim in the upstream repo

The backdoor wasn’t in the source code, only in the distributed binary. So reproducible builds would have flagged the tar as not coming from what was in Git

Reproducible builds generally work from the published source tarballs, as those tend to be easier to mirror and archive than a Git repository is. The GPG-signed source tarball includes all of the code to build the exploit.

The Git repository does not include the code to build the backdoor (though it does include the actual backdoor itself, the binary “test file”, it’s simply disused).

Verifying that the tarball and Git repository match would be neat, but is not a focus of any existing reproducible build project that I know of. It probably should be, but quite a number of projects have legitimate differences in their tarballs, often pre-compiling things like autotools-based configure scripts and man pages so that you can have a relaxed `./configure && make && make install` build without having to hunt down all of the necessary generators.

Time to change that tarball thing. Git repos come with built-in checksums; that should be the way to go.
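To make the tarball-versus-git comparison concrete, here is a rough sketch. It assumes a `diff` that supports `-r`, `-q` and `-x` (GNU diffutils does), and that you have already extracted the release tarball into one directory and checked out the matching tag into another. The function name `tarball_vs_git` and the directory arguments are hypothetical.

```shell
# Hypothetical helper: list every path that differs between an extracted
# release tarball ($1) and a git checkout of the same release ($2).
# Git's own metadata directory is skipped. The exit status is diff's:
# 0 if the trees match, 1 if they differ.
tarball_vs_git() {
    diff -rq -x .git "$1" "$2"
}

# Example (not run here; both directory names are placeholders):
#   tarball_vs_git ./from-tarball ./from-git
```

As the comment above points out, plenty of projects ship generated files (configure scripts, man pages) only in the tarball, so expect noise. The output is a list for a human to review, not a verdict.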

Honestly, while the way they deployed the exploit helped hide it, I’m not sure that they couldn’t have figured out some similar way to hide it in autoconf stuff and commit it.

Remember that the attacker had commit privileges to the repository, was a co-maintainer, and the primary maintainer was apparently away on a month-long vacation. How many parties other than the maintainer are going to go review a lot of complicated autoconf stuff?

I’m not saying that your point’s invalid. Making sure that what comes out of the git repository is what goes to upstream is probably a good security practice. But I’m not sure that it really avoids this.

Probably a lot of good lessons that could be learned.

- It sounds like social engineering, including maybe use of sockpuppets, was used to target the maintainer, to get him to cede maintainer status.

- Social engineering was used to pressure package maintainers to commit.

- Apparently automated testing software did trip on the changes, like some fuzz-testing software at Google, but the attacker managed to get changes committed to avoid it. This was one point where a light really did get aimed at the changes. That being said, the attacker here was also a maintainer, and I don’t think that the fuzzer guys consider themselves responsible for identifying security holes. And while it did highlight the use of ifunc, it sounds like it was legitimately a bug. But, still, it might be possible to have some kind of security examination taking place when fuzzing software trips, especially if the fuzzing software isn’t under control of a project’s maintainer (as it was not, here).

- The changes were apparently aimed at getting in shortly before Ubuntu freeze; the attacker was apparently recorded asking and ensuring that Ubuntu fed off Debian testing. Maybe there needs to be more attention paid to things that go in shortly before freeze.

- Part of the attack was hidden in autoconf scripts. Autoconf, especially with generated data going out the door, is hard to audit.

- As you point out, using a chain that ensures that a backdoor that goes into downstream also goes into git would be a good idea.

- Distros should probably be more careful about linking stuff to security-critical binaries like sshd. Apparently this was very much not necessary to achieve what they wanted to do in this case; it was possible to have a very small amount of code that performed the functionality that was actually needed.

- Unless the systemd-notifier changes themselves were done by an attacker, it’s a good bet that the Jia Tan group and similar are monitoring software, looking for dependencies like the systemd-notifier introduction. Looking for similar problems that might affect similar remotely-accessible servers might be a good idea.

- It might be a good idea to have servers run their auth component in an isolated module. I’d guess that it’d be possible to have a portion of sshd that accepts incoming connections (and is exposed to the outside, unauthenticated world) as an isolated process. That’d be kind of inetd-like functionality. The portion that performs authentication (and is also exposed to the outside) would run as another isolated process, and the code that runs only after authentication succeeds would run separately, with only the latter bringing in most libraries.

- I’ve seen some arguments that systemd itself is large and complicated enough that it lends itself to attacks like this. I think that maybe there’s an argument that some sort of distinction should be made between more- or less-security-critical software, and different policies applied. Systemd alone is a pretty important piece of software to be able to compromise. Maybe there are ways to rearchitect things to be somewhat more resilient and auditable.

- I’m not familiar with the ifunc mechanism, but it sounds like attackers consider it to be a useful route to hide injected code. Maybe have some kind of auditing system to look for that.

- The attacker modified the “in the event of an identified security hole” directions to discourage disclosure to anyone except the project for a 90-day embargo period, and made himself the contact point. That would have provided time to continue to use the exploit. In practice, perhaps software projects should not be the only contact point; perhaps it should be the norm to notify both the software project and a separate security contact unrelated to the project. That increases the risk of the exploit leaking, but protects against compromise of the project maintainership.
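On the ifunc auditing idea, one very rough starting point would be simply listing which symbols in a library are indirect functions. The sketch below assumes the column layout of `readelf -sW` (Num, Value, Size, Type, Bind, Vis, Ndx, Name); the function name `list_ifunc_symbols` is made up. Plenty of legitimate libraries use ifunc, so a hit is not evidence of tampering, and nothing here claims it would have caught this particular backdoor.

```shell
# Hypothetical helper: read `readelf -sW` output on stdin and print the
# names of symbols whose type column is IFUNC (GNU indirect functions).
list_ifunc_symbols() {
    awk '$4 == "IFUNC" { print $8 }'
}

# Example on a live system (not run here; the library path is an assumption):
#   readelf -sW /usr/lib/liblzma.so.5 | list_ifunc_symbols
```

An actual audit would need a baseline of expected ifunc symbols per library to compare against; this only produces the raw list.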

Not exactly - it was in the source tarball available for download from the releases page but not the git source tree.

Probably more SLSA, which reproducible builds are a part of

Why didn’t this become a thing? Surely in 2024, we should be able to build packages from source and sign releases with a private key.

It’s becoming more of a thing but a lot of projects are so old that they haven’t been able to fix their entire build process yet