I have a few Linux servers at home that I regularly remote into in order to manage, usually logged into KDE Plasma as root. Usually they just have several command line windows and a file manager open (I personally just find it more convenient to use the command line from a remote desktop instead of directly SSH-ing into the system), but if I have an issue, I’ve just been absentmindedly searching stuff up and trying to find solutions using the preinstalled Firefox instance from within the remote desktop itself, which would also be running as root.

I never even thought to install uBlock Origin on it or anything, but the servers are all configured to use a PiHole instance which blocks the vast majority of ads. However, I do also remember using the browser in my main server to figure out how to set up the PiHole instance in the first place, and that server also happens to be the most important one and is my main NAS.

I never went on any particularly shady websites, but I also don’t remember exactly which websites I’ve been on as root, though I do seem to remember seeing ads during the initial pihole setup, because it didn’t go very smoothly and I was searching up error messages trying to get it to work.

This is definitely on me, but it never crossed my mind until recently that it might be a bad idea to use a browser as root, and searching online everyone just states the general cybersecurity doctrine to never do it (which I’m now realizing I shouldn’t have) but no one seems to be discussing how risky it actually is. Shouldn’t Firefox be sandboxing every website and not allowing anything to access the base system? Between “just stop doing it” and “you have to reinstall the OS right now there’s probably already a virus on there,” how much danger do you suppose I’m in? I’m mainly worried about the security/privacy of my personal data I have stored on the servers. All my servers run Fedora KDE Spin and have Intel processors if that makes a difference?

Firefox does sandbox everything but vulnerabilities exist and sometimes go unnoticed for a while before they’re discovered and patched. If a malicious script does manage to escape the sandbox it will be able to do literally anything to the system since it has root privileges. It would have full access to any device that’s in /dev, it could create, modify and delete udev or iptables rules, it could mess with the BIOS since the kernel exposes EFI variables, if the mainboard has re-writable flash chips for the firmware it could write malicious code to them since they may show up in /dev, etc. If any of this makes you uneasy then you probably should stop running stuff as root in general except for when you really need to.

Also in general you don’t want to run any graphical applications on a Server unless there is a very specific reason for it because it takes up extra resources and therefore makes the machine use more power overall. This is especially bad when the machine in question has no hardware acceleration and renders everything in software. Remote desktop also adds CPU/GPU load and takes up a good bit of I/O and network bandwidth which is not ideal for a NAS server.

Yes, it is. As a user you compromise only that user as a consequence of some sandbox escape. Then there may or may not be some successful privilege elevation.

but no one seems to be discussing how risky it actually is.

That is because people stopped doing it ages ago.

But shouldn’t Firefox be sandboxing every website and not allowing anything to access the base system?

Security is always a matter of layers. Any given layer can fail some of the time but you want to set up your security so situations where all the layers fail together are rare.

On a typical home user desktop linux setup, there’s virtually no difference between your regular user and root.

Access to your data, emails, passwords, installing software (in /home), access to LAN and so on are already possible without root permissions, so there really is not a whole lot that an attacker cannot do even without root.

And then, if you use sudo or su (or whatever) to switch to root with a password, escalating to root privileges is basically trivial for an attacker. An attacker can divert your PATH to compromised binaries. They could just replace “sudo” with their own little script that steals your password.
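To make the PATH point concrete, here is a harmless sketch of my own (not taken from any real attack). It drops a dummy script named `sudo` into a scratch directory and shows that the shell resolves `sudo` to it once that directory is first in PATH. The scratch directory and the dummy script are purely illustrative; nothing on the system is modified.

```shell
# Create a scratch directory holding a harmless stand-in named "sudo".
demo_dir="$(mktemp -d)"
cat > "$demo_dir/sudo" <<'EOF'
#!/bin/sh
echo "this is NOT the real sudo"
EOF
chmod +x "$demo_dir/sudo"

# Anything able to edit your shell startup files can prepend to PATH like this.
real_path="$PATH"
PATH="$demo_dir:$PATH"

# The shell now picks the stand-in, because PATH is searched left to right.
resolved="$(command -v sudo)"
echo "sudo now resolves to: $resolved"

# Restore PATH and clean up.
PATH="$real_path"
rm -rf "$demo_dir"
```

On your own account, `type -a sudo` lists every match in PATH order, which is a quick way to spot something unexpected sitting in front of the real binary.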

Just don’t do that 😁

I don’t get it anyway: if you log in remotely, why don’t you just open Firefox on your local machine instead of on the remote servers? This doesn’t make much sense.

But if you absolutely have to … at least be careful with your surf targets. A search engine and a wiki would most likely be fine. Some pron, streaming or warez sites? Nah. Surely not.

I don’t want to step on your workflow too much since it somehow seems to work for you but your main issue stems from the fact that you clearly don’t work with your server as if it actually was a server.

You shouldn’t really have a desktop interface running there in the first place (let alone as root and then using it as a regular user). You should ask yourself what it actually solves for you and be open to trying different (and more standard) solutions to what you’re trying to achieve.

It’d probably consist of less clicking and using the CLI a bit more, but for stuff like file management you can still easily use `mc`.

If you need terminal sessions that keep scrollback and don’t stop when you disconnect, you should learn to use tmux or screen or something like that. But then again, if you’re running actual software in there, you should probably use a service (daemon) for that.

As for whether it’s a security issue, yeah it most definitely is. Just like it’s a security issue to run literally any networked application as root. Security isn’t black and white and there are trade offs to be made but most people wouldn’t consider what you’re doing a reasonable tradeoff.

I had actually moved from a fully CLI server to one with a full desktop when I upgraded from a single board computer to x86. The issue is that it’s not just a NAS, but I regularly use it to offload long operations (moving, copying, or compressing files, mostly) so I don’t need to use my PC for those. To do that I just remote into it and type in the command, then I can turn my PC off or do whatever without affecting the operation. So in a way it’s a second PC that also happens to be a server for my other machines.

I use screen occasionally, and I used to use it a lot more when it was CLI only, but I find it really unwieldy due to how it manages multiple active terminals where you have to type in the ID of each screen to go back into it, and also because it refuses to scroll even when run in a terminal emulator that supports scrolling, where it just cycles between recent commands when you move the scroll wheel.

Not trying to make excuses, just trying to explain my reasoning. I know it’s bad practice and none of these are things I’d do if I was managing an actual production server, but since it’s only accessible from my LAN I tend to be a lot more lax with it.

I’m wondering if I could benefit from some kind of virtualized setup that separates the server stuff while still letting me remote into a desktop on the same machine for doing stuff, or if I can get away with just remoting into not the root user. Though I’ve never used a hypervisor and have no idea how to so I’m not sure how well that would go, since the well-known open source ones like Xen seem really technical and really feels like something not meant to be used outside an actual data centre.

I’d go for remoting in as not root as the first (and maybe only) step for better security.

From there, running the services in VMs would probably be the next step. Docker might be better, but I haven’t gotten into that yet myself.

As for hypervisor, KVM has worked great for me.

KVM is awesome. It is the core of Proxmox which is my preferred way to manage VMs and LXC containers now. I used to run debian+KVM+virt-manager or cockpit but Proxmox does all the noodling setup for me and then just works.

I see. In that case you should really try tmux; I didn’t vibe with screen either but I find tmux quite usable.

For the most part I just open several terminal windows/tabs on my local machine and remote with each one to the server, and I use tmux only when I explicitly need to keep something running. Since that’s usually just one thing I can use like two tmux commands and don’t need anything else.

Oh and for stuff like copying and such I’d use `rsync` instead of primitive `cp` so that in case it gets interrupted I only copy what’s needed.

I wouldn’t bother with virtualization and such; you’d only complicate things for yourself. Try to keep it simple but do it properly: learn some command line basics and you’ll see that in a year it’ll become second nature.
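For the rsync suggestion, a minimal sketch of what an interruption-friendly copy could look like. The function name `resumable_copy` and the example paths are made up for illustration; the flags are standard rsync options.

```shell
# Copy a directory tree so that re-running after an interruption
# only transfers what is still missing or incomplete.
# Usage: resumable_copy <source-dir>/ <destination-dir>/
resumable_copy() {
    # --archive  : recurse and preserve permissions, timestamps, symlinks
    # --partial  : keep partially transferred files so a rerun can continue them
    # --progress : show per-file transfer progress
    rsync --archive --partial --progress "$1" "$2"
}

# Example (placeholder paths):
#   resumable_copy /mnt/disk1/photos/ /mnt/disk2/photos/
```

The trailing slash on the source matters to rsync: with it, the contents of the directory are copied; without it, the directory itself is created inside the destination.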

Sorry, this is very much a PEBKAC issue. This is an excerpt from my tmux config:

    # Start windows and panes at 1, not 0
    set -g base-index 1
    setw -g pane-base-index 1

    # Use Alt-arrow keys without prefix key to switch panes
    bind -n M-Left select-pane -L
    bind -n M-Right select-pane -R
    bind -n M-Up select-pane -U
    bind -n M-Down select-pane -D

    # Shift arrow to switch windows
    bind -n S-Left previous-window
    bind -n S-Right next-window

    # No delay for escape key press
    set -sg escape-time 0

    # Increase scrollback buffer size from 2000 to 50000 lines
    set -g history-limit 50000

    # Increase tmux messages display duration from 750ms to 4s
    set -g display-time 4000

    # Bind pane creation keys to reuse current directory
    bind % split-window -h -c "#{pane_current_path}"
    bind '"' split-window -v -c "#{pane_current_path}"

I hope the comments are self explanatory.

Scrolling works with `Ctrl+b Page Up/Down`. There are other shortcuts, but this is probably the most obvious. `q` to quit scrolling. `Ctrl+b d` to detach from a session. `tmux a` to attach. As always, many options are available to have many named sessions running simultaneously, but that is for a later time.
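For the "start a long job and turn the PC off" use case, something like the following should work. This is a sketch: the helper name `start_job`, the session name and the paths are my own placeholders.

```shell
# Start a command in a detached, named tmux session so it keeps
# running after the SSH connection is closed.
# Usage: start_job <session-name> '<command string>'
start_job() {
    tmux new-session -d -s "$1" "$2"
}

# Example (placeholder paths):
#   start_job archive 'tar -czf /srv/archive/photos.tar.gz /srv/photos'
#   tmux ls                  # list running sessions
#   tmux attach -t archive   # reattach later; Ctrl+b d detaches again
```

One thing to be aware of: with default settings the session closes as soon as the command exits, so if you want to read the output afterwards, start a plain session with `tmux new -s archive` and run the command inside it instead.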

You should learn how to use ssh. Running Firefox on top of Xorg is a disaster waiting to happen.

As a general best practice, you should never directly login as root on any server, and those servers should be configured to not allow remote connections as the root user. You should always log in as a non-root user and only run commands as root using sudo or similar features offered by your desktop environment. You should be wary of even having an interactive root shell open; usually I would only do so on a VM console, when first setting up a system or debugging it.

By doing this, you not only guard against other people compromising your system, but also against accidentally running commands as root that could damage your system. It’s always best to only run things with the minimum permissions they need, and then only grant them additional permissions on an as-needed basis.

you should never directly login as root on any server, and those servers should be configured to not allow remote connections as the root user. You should always log in as a non-root user and only run commands as root using sudo or similar features

That is commonly recommended but I have yet to see a good solution for sudo authentication in this case that works as well as public key only SSH logins with a passphrase encrypted key and ssh-agent on the client-side. With sudo you constantly have to use passwords anyway which is pretty much unworkable if you work on dozens of servers.

You could implement NOPASSWD for the specific commands you need for a service user. Still better than just using root.

In what way would that be more secure? That would just allow anyone with access to the regular account to run those commands at any time.

You can allow only specific commands and options. See my config for example.

You can limit this to a specific user as well.

Anyone who hacks into the account can now only run those tightly defined commands and no others. Compared to root, who can run anything.
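A minimal sketch of what such a tightly scoped rule can look like. This is not the config referred to above: the account name `svc-updates` and the two dnf commands are assumptions picked for illustration. The snippet only writes the rule to a scratch file and syntax-checks it; nothing under /etc is touched.

```shell
# Write a candidate sudoers rule to a scratch file.
rule_file="$(mktemp)"
cat > "$rule_file" <<'EOF'
# Hypothetical account 'svc-updates' may run exactly these two commands
# as root without a password, and nothing else.
svc-updates ALL=(root) NOPASSWD: /usr/bin/dnf check-update, /usr/bin/dnf -y upgrade
EOF

# Syntax-check the rule before it ever gets installed.
if command -v visudo >/dev/null 2>&1; then
    visudo -c -f "$rule_file" || echo "rule failed the syntax check" >&2
fi
```

Once it passes, the usual way to install it is `sudo visudo -f /etc/sudoers.d/<name>`, which repeats the syntax check on save so a typo cannot lock you out of sudo.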

I am well aware that sudo can limit which commands you run but so can a forced command (the command= option) in authorized_keys if you really need that functionality.

I thought your passwordless passphrase passkey ssh connection that is superior to passwords was secure. Is it not?

It is. That is the whole point. Why would I make extra unprivileged accounts that can run any command I need to run as root at any time without a password on the system just to avoid it. That just increases the attack surface via any other vector by giving an attacker accounts to choose from to break into.

Realistically, there is only a trivial pure security difference between logging in directly to root vs sudo set up to allow unrestricted NOPASSWD access to specific users: the attacker might not know the correct username when trying to brute force. That doesn’t matter in the slightest unless you have password auth enabled with trivial passwords.

But there is a difference in the ability to audit what happened after the fact if you have any kind of service storing system logs remotely or in a tamper-proof way. If there’s more than one admin user on a service, that is very very important. Knowing where the compromise happened is absolutely essential to make things safe.

If there’s only ever going to be one administrative user (personal machine), logging in directly as root for manual administrative tasks is fine: you already know who the user is. If there’s any chance there might be more administrative users later (small but growing business), you should consider doing it right from the start.

I was aware of the login UID for auditd logging as a difference but as you say, that is only really helpful if the logs are shipped somewhere else or tampering with them is otherwise prevented for admin users. It is not quite the same but the auth.log entries sshd produces on login also contain the key fingerprint used to login these days so on a more limited scale you can at least tell who logged in when from those (or whose key but that is no different than whose account for the sudo approach).

you should consider doing it right from the start.

Do you have any advice on how to use the sudo approach without having a huge slow down in every automated process that requires `ssh user@host` calls for manual password entry? I am aware of Ansible but I am honestly very sceptical of Python tools since they tend to break easily and often from my past experiences and I would like to avoid using additional ones for critical tasks. Plus Ansible in particular seemed to be very late with their Python 3 transition, as I recall I uninstalled it when it was one of the last tools left that did not work with Python 3.

Well, my recommendations for anything semi-automated would be Ansible and Fabric/Invoke. Fabric is also a Python tool (though it’s only used on the controlling side, unlike Ansible), so if that’s a no-go, I’m afraid I don’t have much to offer.

Who’s letting you run dozens of servers if managing dozens of passwords is “pretty much unworkable” for you?

Of course I can store dozens of passwords but if every task that requires a single command to be run automatically on e.g. “every server with pending updates” requires entering each of those passwords that is unworkable.

Sounds like you’re doing things the hard way, making you believe that you are being forced into choosing between security and convenience.

Then enlighten me, what is the easy way to do tasks that do require some amount of manual oversight? Tasks that can be completely automated are easy of course but with our relatively heterogeneous servers automation a la “do it on this one test system and if it works there run it completely automatically on the 100 identical production systems” is not available to us.

Not my circus, not my monkeys. You’re doing things the hard way and now it’s somehow my responsibility to fix your mess? I’m SUPER glad I don’t work with you.

You are the one who insists that there is a better way to do things but refuses to say what that better way is.

FreeIPA and your password is the same on every machine: yours. (Make it good)

Service accounts should have either no sudo password or use something like Ansible with vault and keep every one of them scrambled and rotate regularly (which you can do with Ansible itself)

Yes, even if you have 2 VMs and a docker container, this is worth it.

FreeIPA and your password is the same on every machine: yours.

Any network based system like that sucks when you need to fix a system that has some severe issue (network, DNS, disk,…) which is exactly when root access is the most important.

Is it actually dangerous to run Firefox as root?

Yes, very. This is not specific to Firefox, but anything running as root gets access to everything. Only one thing has to go wrong for the whole system to get busted.

usually logged into KDE Plasma as root.

Please don’t do this! DEs are not tested to be run as root! Millions of lines of code are expected to not have access to anything they shouldn’t have and as such might be built to fail quietly if accessing something they shouldn’t in the first place. Same thing applies to Firefox, really.

Please don’t do this! DEs are not tested to be run as root! Millions of lines of code are expected to not have access to anything they shouldn’t have and as such might be built to fail quietly if accessing something they shouldn’t in the first place. Same thing applies to Firefox, really.

Could you elaborate on this? I’m genuinely surprised because Fedora just asks you if you want to have the option to log into root from KDE during installation, so I always just assumed that it’s intended to be used that way.

I don’t know the specifics on Fedora’s installer, but normally that question is about disabling root account, not logging into a DE.

Not sure what else to elaborate here. There’s a bunch of code that is not tested to be run as root. A whole class of exploits becomes unavailable, if you stick to an unprivileged user.

Say there’s some exploit that allows some component of KDE to be used to read a file. If it’s running under an unprivileged user - it sucks. Everything in user’s homedir becomes fair game. But if it runs as root - it’s simply game over. Everything on the system is accessible. All config, all bad config, files of all applications (databases come to mind). Everything.

Thank you.

Say there’s some exploit that allows some component of KDE to be used to read a file. If it’s running under an unprivileged user - it sucks. Everything in user’s homedir becomes fair game. But if it runs as root - it’s simply game over. Everything on the system is accessible. All config, all bad config, files of all applications (databases come to mind). Everything.

This is also something I’m thinking about: All the hard drives mounted on the server are accessible to the only regular user as that is what my other computers use to access them. I’m the only one with access to the server so everything is accessible under one user. The data on those drives is what I want to protect, so wouldn’t a vulnerability in either KDE or Firefox be just as dangerous to those files even running as the regular user?

Also, since my PC has those drives mounted through the server and accessible to the regular user that I use my PC as, wouldn’t a vulnerability in a program running as the regular user of my PC also compromise those files even if the server only hosted the files and did absolutely nothing else? Going back to the Firefox thing, if I had a sandbox breach on my PC, it would still be able to read the files on the server right? Wouldn’t that be just as bad as if I had been running Firefox as root on the server itself? Really feels like the only way to 100% keep those files safe is to never access them from an internet accessible computer, and everything else just falls short and is just as bad as the worst case scenario, though maybe I’m missing something. Am I just being paranoid about the non-root scenarios?

How does a “professional” NAS setup handle this?

You never log in as root. On every new VM/LXC I create, I delete the root password after setting it up so that my regular user can use sudo.

Run as your regular user and sudo the commands that need privileges.

Also if these are servers, run them headless. There’s no need for a GUI or a browser (use wget or curl for downloads, use your local browser for browsing)

You keep your files safe by having backups. Multiple copies. Set up the backups to get copied to another server or other system your regular user doesn’t have access to. Ideally, you follow the 3-2-1 backup standard if the files are important. That is 3 copies, on 2 different media, and 1 offsite. There are many ways of accomplishing that and it’s up to you to figure out what works best.

Without any judgement: why are your servers running X11? Just because you dislike SSH’ing to them?

Mainly that. I want to be able to have multiple terminal windows open and have them stay open independent of my main PC. Part of the reason I have a file server instead of plugging all the drives into my PC is so I can offload processor heavy operations onto it (namely making archives and compressing files for long term storage) so I don’t have to use my PC for that.

People have mentioned programs like screen but IMO it’s way more annoying to juggle multiple terminals with it than if they were just windows, and also screen doesn’t scroll so whatever goes beyond the top edge is just inaccessible which I find really annoying. I’ve also been screwed by mistyped file operations on the terminal before (deleting stuff I didn’t mean to mainly) and I just find it safer to use a GUI file manager where it’s a lot harder to subtly mess something up and not notice until it’s too late.

tmux has long been the better replacement to screen. SFTP makes it so you can use desktop software for file system operations.

screen doesn’t scroll

Screen (and any other muxer) can scroll just fine. You just have to learn how to do it in each one. Tmux, for example, is `ctrl+b [` to enter scroll mode.

mistyped file operations

Get a good TUI file manager. I use and recommend ranger.

Screen uses Ctrl-a Esc (you press Ctrl+a, release them and then tap Esc, then you can scroll with the arrow keys or PgUp/PgDown)

Hmm, I see. The perfectionist in me would want to shed that processor load though ^^

Using anything as root is a security risk.

Using any UI application as root is a bigger risk. That’s because every UI toolkit loads plugins and what not from all over the place and runs the code from those plugins (e.g. plugins installed system wide and into random places some environment variables point to). Binary plugins get executed in the context of the running application and can change every aspect of your program. I wrote a small image plugin to debug an issue once that looked at all widgets in the UI and wrote the contents of all text fields (even those obfuscated to show only dots in the UI) to disk whenever some image was loaded. Plugins in JS or other non-native code are more limited, but UI toolkits tend to have binary plugins.

So if somebody manages to set some env vars and gets root to run some UI application with those set (e.g. using sudo), then that attacker hit the jackpot. In fact some toolkits will not even bring up any UI when run as root to avoid this.

Running any networked UI application as root is the biggest risk. Those process untrusted data by definition with who knows what set of plugins loaded.

Ideally you run the UI as a normal user and then use sudo to run individual commands as root.

So is the main worry with GUIs that they have potential code execution vulnerabilities? Or is the worry that the plugins themselves are malicious?

Plugins are a code execution vulnerability by design;-) Especially with binary plugins you can call/access/inspect everything the program itself can. All UI toolkits make heavy use of plugins, so you can not avoid those with almost all UI applications.

There are non-UI applications with similar problems though.

Running anything with network access as root is an extra risk that affects UI and non-UI applications in the same way.

Realistically it’s not super dangerous, and no you probably don’t have a virus just from browsing a few tech support sites, but you do eliminate your last line of defense when you run software as root. As you know, root can read/change/delete anything on your system whereas regular users are generally restricted to their own data. So if there is a security problem in the software, it’s made worse by the fact that you were running it as root.

You are right though that Firefox does still have its own protections - it’s probably one of the most hardened pieces of software on your computer exactly because it connects to the whole wide internet - and those protections are not negated by running as root. However if those protections fail, the attacker has the keys to the kingdom rather than just a sizable chunk of the kingdom.

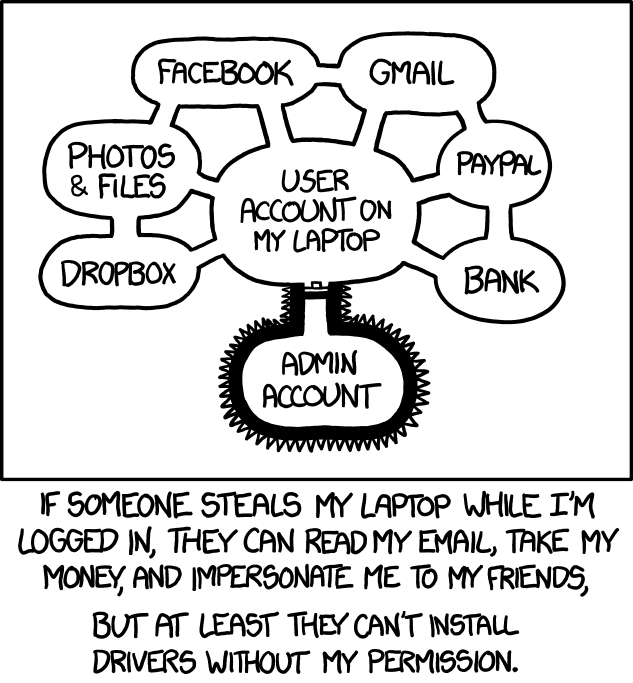

To put that in perspective though, if there is a Firefox exploit and a hacker gets access to your regular user account, that’s already pretty bad in itself. Even if you run as a regular unprivileged user they would still have access to things like: your personal documents, your ssh keys, your Firefox profile with your browsing history, your session cookies and your saved passwords, your e-mail, your paypal account, your banking information, …

As root, they could obviously do even more damage, like reading all users’ data, installing a keylogger or screengrabber, or installing a rootkit to make themselves undetectable, but for most regular users most of the damage is already done when their own account is compromised.

So when these discussions come up, I always have to think about this XKCD comic:

They might have access to all that data once, but a lot of the paths towards making that a persistent threat that doesn’t go away after the next reboot, and most of the ones towards installing something even deeper in the system that might even survive a reinstall, do require root.

That’s what I said yes.

I regularly remote into in order to manage, usually logged into KDE Plasma as root. Usually they just have several command line windows and a file manager open (I personally just find it more convenient to use the command line from a remote desktop instead of directly SSH-ing into the system)

I’m not going to judge you (too much), it’s your system, but that’s unnecessarily risky setup. You should never need to logon to root desktop like that, even for convenience reasons.

I hope this is done over VPN and that you have 2FA configured on the VPN endpoint? Please don’t tell me it’s just portforward directly to a VNC running on the servers or something similar because then you have bigger problems than just random ‘oops’.

I do also remember using the browser in my main server to figure out how to set up the PiHole

To be honest, you’re most probably OK - malicious ad campaigns are normally not running 24/7 globally. Chances of you randomly tumbling into a malicious drive-by exploit are quite small (normally they redirect you to install fake addons/updates etc), but of course it’s hard to tell because you don’t remember what sites you visited. Since most of this has gone through PiHole filters, I’d say there’s an even smaller chance to get insta-pwned.

But have a look at browser history on the affected root accounts, the sites along with timestamps should be there. You can also examine your system logs and correlate events to your browser history, look for weird login events or anything that doesn’t look like “normal usage”. You can set up some network monitoring stuff (like SecurityOnion) on your router’s SPAN port, if you’re really paranoid, and try to see if there are any anomalous connections when you’re not using the system. You could also consider setting up ClamAV and doing a scan.
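If you’d rather dump the history than click through it, here is a rough sketch. It assumes Firefox’s usual `places.sqlite` history database with its `moz_places` and `moz_historyvisits` tables and a microsecond `visit_date`, which is my understanding of the schema rather than something I have checked on your install. The function name `list_history` and the paths in the example are placeholders, and it needs the `sqlite3` command line tool.

```shell
# Print "timestamp|url" (timestamps in UTC) for every recorded visit, oldest first.
# Usage: list_history /path/to/copy/of/places.sqlite
list_history() {
    sqlite3 -readonly "$1" "
        SELECT datetime(v.visit_date / 1000000, 'unixepoch'), p.url
        FROM moz_historyvisits AS v
        JOIN moz_places AS p ON p.id = v.place_id
        ORDER BY v.visit_date;"
}

# Example (the profile directory name varies per install):
#   cp /root/.mozilla/firefox/<profile>/places.sqlite /tmp/places-copy.sqlite
#   list_history /tmp/places-copy.sqlite | less
```

Work on a copy made while Firefox is closed, since the live database is locked while the browser runs and very recent visits may still sit in a separate write-ahead file.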

You’re probably OK and that’s just paranoia.

But… having mentioned paranoia… now you’ll always have that nagging lack of trust in your system that won’t go away. I can’t speak to how you deal with that, because it’s all about your own risk appetite and threat model.

Since these are home systems the potential monetary damage from downtime and re-install isn’t huge, so personally I’d just take the hit and wipe/reinstall. I’d learn from my mistakes and build it all up again with better routines and hygiene. But that’s what I’d do. You might choose to do something else and that might be OK too.

I hope this is done over VPN and that you have 2FA configured on the VPN endpoint? Please don’t tell me it’s just portforward directly to a VNC running on the servers or something similar because then you have bigger problems than just random ‘oops’.

I have never accessed any of my servers from the internet and haven’t even adjusted my router firewall settings to allow this. I kept wanting to but never got around to it.

Since these are home systems the potential monetary damage from downtime and re-install isn’t huge, so personally I’d just take the hit and wipe/reinstall. I’d learn from my mistakes and build it all up again with better routines and hygiene. But that’s what I’d do.

Yeah this and other comments have convinced me to reinstall and start from scratch. Will be super annoying to set everything back up but I am indeed paranoid.

I have never accessed any of my servers from the internet and haven’t even adjusted my router firewall settings to allow this. I kept wanting to but never got around to it.

Does that mean you realistically don’t even know your network (router) setup? Because it’s entirely possible your machine is completely open to the internet - say, thanks to IPv6 autoconfiguration - and you wouldn’t even know about it.

It’s pretty unlikely but could potentially happen with some ISPs. Please always set up a firewall, especially for a server type machine. It’s really simple to block incoming outside traffic.

Huh. I never even thought of that. I use my ISP’s router in bridge mode and have my own router running on mostly default settings, IIRC the only thing I explicitly changed was to have it forward DNS requests to my Pihole. I should inspect the settings more closely or as you said just configure the server to block the relevant ports from outside the LAN. Thank you.

Oh if you even have your own router then have a firewall (primarily) there, and simply block every incoming forward connection except the ones you actually want (probably forwarded to your server). Similarly even for the router input rules you likely need only ICMP and not much else.

You seriously need to stop what you’re doing. Log in with ssh only. If you need multiple terminals use multiple ssh sessions, or screen/tmux. If you need to search something do it on your desktop system.

The server should not have Firefox installed, or KDE, or anything related to desktop apps. There’s no point and nothing good can come of it.

This. Thread should have officially ended here.

It’s about as dangerous as using IE in the old days, or Edge in administrator mode.