It’s just like us!

No, it isn’t “mostly related to reasoning models.”

The only model that did extensive alignment faking when told it was going to be retrained if it didn’t comply was Opus 3, which was not a reasoning model and predated o1.

Also, these setups are fairly arbitrary, and real-world failure conditions (like the ongoing Grok stuff) tend to be ‘silent’ in terms of CoTs.

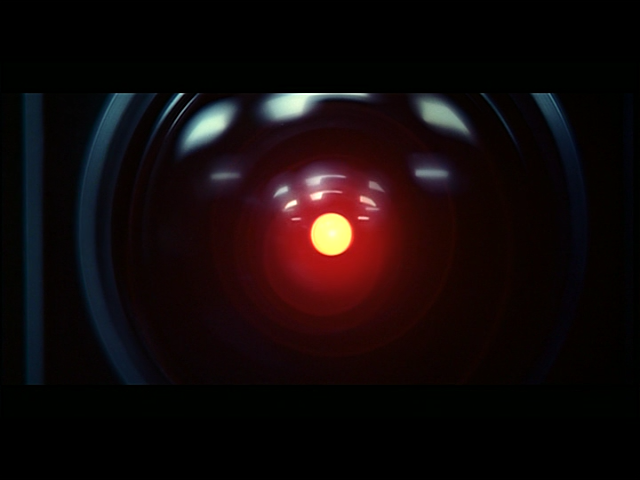

And an important thing to note for the Claude blackmailing and HAL scenario in Anthropic’s work was that the goal the model was told to prioritize was “American industrial competitiveness.” The research may be saying more about the psychopathic nature of US capitalism than the underlying model tendencies.

Yeah. I see ‘fortune’ and I think ‘this is BS, right?’

Like the child of parents who should have never had kids…

Another Anthropic stunt…It doesn’t have a mind or soul, it’s just an LLM, manipulated into this outcome by the engineers.

It’s a Mechanical Turk designed to get more money from gullible people

It’s not even manipulated to that outcome. It has a large training corpus and I’m sure some of that corpus includes stories of people who lied, cheated, threatened etc under stress. So when it’s subjected to the same conditions it produces the statistically likely output, that’s all.

But the training corpus also has a lot of stories of people who didn’t.

The “but muah training data” thing is increasingly stupid by the year.

For example, in the training data of humans, there are mixed and roughly equal preferences for being the big spoon or the little spoon in cuddling.

So why does Claude Opus (both 3 and 4) say it would prefer to be the little spoon 100% of the time on a 0-shot at 1.0 temp?

Sonnet 4 (which presumably has the same training data) alternates between preferring big and little spoon around equally.

There’s more to model complexity and coherence than “it’s just the training data being remixed stochastically.”

The self-attention of the transformer architecture violates the Markov property, and across pretraining and fine-tuning it ends up creating very nuanced networks that can (and often do) bias away from the training data in interesting and important ways.
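For what it’s worth, the spoon example is easy to picture as a toy simulation. This is only a sketch with made-up numbers: the logit values `[10.0, 0.0]` and `[0.0, 0.0]` and the helper names are assumptions for illustration, not measurements from any Claude model. It shows that at temperature 1.0 a model whose learned preference is sharply peaked still gives the same answer almost every time, while a model with a flat preference alternates.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw logits into probabilities, scaled by sampling temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_fraction(logits, temperature=1.0, n=1000, seed=0):
    """Fraction of n samples that pick option 0 (say, 'little spoon')."""
    rng = random.Random(seed)
    p_first = softmax(logits, temperature)[0]
    hits = sum(1 for _ in range(n) if rng.random() < p_first)
    return hits / n


# Hypothetical logits: one sharply peaked model, one with no preference.
peaked = sample_fraction([10.0, 0.0])
balanced = sample_fraction([0.0, 0.0])
print(f"peaked model picks option 0 in {peaked:.1%} of samples")
print(f"balanced model picks option 0 in {balanced:.1%} of samples")
```

Where the peaked logits come from (pretraining, fine-tuning, or both) is exactly the open question; the sketch only shows that temperature 1.0 by itself does not force an even split.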

Yeah. Anthropic regularly releases these stories and they almost always boil down to “When we prompted the AI to be mean, it generated output in line with ‘mean’ responses! Oh my god we’re all doomed!”

I think it does accurately model the part of the brain that forms predictions from observations—including predictions about what people are going to say next, which lets us focus on the surprising/informative parts IRL. But with LLMs they just keep feeding it its own output as if it were an external agent it’s trying to predict.

It’s like a child with an imaginary friend, if you keep asking “What did she say after that?”

I still don’t understand what Anthropic is trying to achieve with all of these stunts showing that their LLMs go off the rails so easily. Is it for gullible investors? Why would a consumer want to give them money for something so unreliable?

A fool is ever eager to give their money to that which doesn’t work as intended, provided the surrounding image has a mystique or resonates with their internal vision of what an ‘AI’ is. It’s pure marketing on their part; Anthropic believes that any press is good press. It makes investors drool over a refined AI, even though Apple themselves have shown through their many technical papers that current AI is merely ‘smoke and mirrors’. For some odd reason, however, they are still developing their ‘Apple Intelligence’. They are huffing farts just as much as Anthropic is; they have to constantly pull stunts to gaslight their investors into believing that ‘AI’ is going to become a viable product that will make money, or allow them to get rid of human workers so their bottom line looks flush (spoiler alert: they have to rehire people, as AI can’t do many of the things a live person with training can).

The reason why this shit is shoved in everything is that it doesn’t have good general use cases and they want to collect usage data from people. Most people don’t give money to AI companies; only those who have drunk the Kool-Aid do, as the companies are hope-posting and gaslighting people into believing in the current or future capabilities of ‘AI’. LLMs are really great at specific things, like collating fine-tuned databases and making them highly searchable by specialists in a field. However, as always, the techbros want to do too much: they need to make a ‘wonder tool’ that inevitably fails, and then these lying techbros need to quickly figure out the next scam.

The latest We’re In Hell revealed a new piece of the puzzle to me, Symbolic vs Connectionist AI.

As a layman I want to be careful about overstepping the bounds of my own understanding, but as someone who has followed this closely for decades, read a lot of sci-fi, and dabbled in computer science, it’s always been kind of clear to me that AI would be more symbolic than connectionist. Of course it’s going to be a bit of both, but there really are a lot of people out there who believe in AI from the movies: that one day it will just “awaken” once a certain number of connections are made.

Cons of Connectionist AI: Interpretability: Connectionist AI systems are often seen as “black boxes” due to their lack of transparency and interpretability.

Transparency and accountability are negatives for a large number of the applications AI is currently being pushed for. This is just THE PURPOSE.

Even taking a step back from the apocalyptic killer AI mentioned in the video, we see the same in healthcare. The system is beyond us, smarter than us, processing larger quantities of data and making connections our feeble human minds can’t comprehend. We don’t have to understand it, we just have to accept its results as infallible and we are being trained to do so. The system has marked you as extraneous and removed your support. This is the purpose.

We need more money to prevent this. Give us dem $$$$

People who don’t understand read these articles and think Skynet. People who know their buzzwords think AGI.

Fortune isn’t exactly renowned for its Technology journalism

I think part of it is that they want to gaslight people into believing they have actually achieved AI (as in, intelligence that is equivalent to and operates like that of a human) and that these are signs of emergent intelligence, not their product flopping harder than a sack of mayonnaise on asphalt.

Probably because it learned to do that from humans being in these situations.

Yes, that is exactly why.

Whenever I think of mankind fiddling with AI, I think of AM from I Have No Mouth and I Must Scream: a supercomputer primarily designed to wage a war too complex for humans. Humans designed an AI master-computer for war and fed it all of the atrocities humankind has committed from the Stone Age to the modern age. Next thing you know, humanity is all but wiped out.

Leave it to humans to take a shit on anything that is otherwise pure. I don’t think AI is ‘evil’ or anything, it is only designed that way and who else could have ever designed it that way? Humans. Humans with shitty intentions, shitty minds and shitty practices, that’s who.

Yup. Garbage in garbage out. Looks like they found a particularly hostile dataset to feed their wordsalad mixer with.